Last Update: Dec 20, 2025


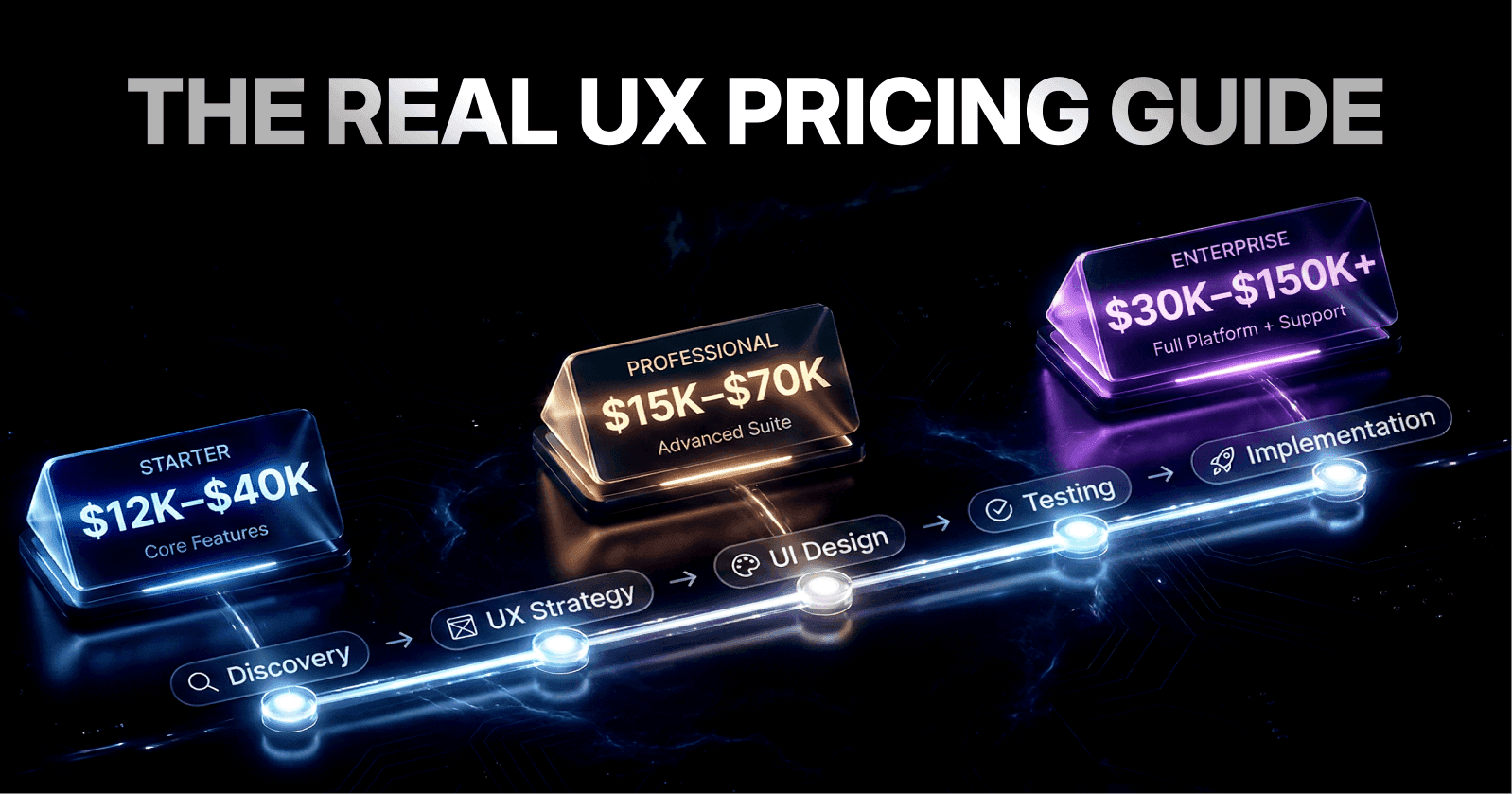

Global AI market in life sciences reaches $2.45 billion in 2024, projected to surge to $15.46 billion by 2034 with 20.23% CAGR

UX design accounts for 50% of competitive advantage in healthcare digital products and 33% of customer churn prevention

Regulatory compliance adds 40-60% to development timelines—majority attributed to UX validation and documentation requirements

Multi-stakeholder engagement achieves 3.2x higher adoption rates by involving researchers, clinicians, patients, and regulatory specialists

Compliance requirements increase interaction cost 25-35% but reduce error rates by 60-70% in validated healthcare systems

Strategic friction inverts consumer principles—deliberate barriers in high-risk workflows while removing friction from routine tasks

Post-market surveillance detects model drift 45 days earlier through systematic override tracking versus accuracy-only monitoring

Companies investing 15-20% of budgets in UX capture 2-3x market share through products achieving 60-70% lower error rates

95% of healthcare apps never sustain usage—failure stems from poor workflow integration and inadequate stakeholder involvement

Comprehensive UX methodology increases costs 15-25% but reduces total ownership costs 40-60% through lower support and higher retention

Introduction

The life sciences industry stands at a technological inflection point. The global AI market in life sciences reached USD 2.45 billion in 2024 and is projected to surge to USD 15.46 billion by 2034, with a compound annual growth rate of 20.23%. North America commands 41.80% market share, while Europe accelerates at 27.90% CAGR.
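As a quick sanity check on the figures above, the growth rate implied by the two endpoints can be recomputed. This is only an arithmetic sketch: the function names are illustrative, and the ten-year horizon (2024 to 2034) is taken from the dates quoted in the paragraph.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1


def compound(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at a fixed annual rate."""
    return start_value * (1 + rate) ** years


# Figures quoted in the text, in USD billions.
cagr = implied_cagr(2.45, 15.46, years=10)
projected_2034 = compound(2.45, 0.2023, years=10)

print(f"Implied CAGR 2024-2034: {cagr:.2%}")
print(f"USD 2.45B compounded at 20.23% for 10 years: {projected_2034:.2f}B")
```

The two quoted endpoints and the quoted growth rate agree with each other to within rounding.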

Yet market growth alone doesn't guarantee success. The critical differentiator is user experience: according to Gartner, UX design accounts for 50% of competitive advantage in healthcare digital products and 33% of customer churn prevention.

Life science software operates within a uniquely constrained ecosystem defined by regulatory rigor, safety criticality, complex workflows, and heterogeneous user bases. The stakes involve patient safety, research integrity, and regulatory compliance—not merely user satisfaction.

The Nielsen Norman Group emphasizes that

"even minor usability issues can have grave consequences, affecting not just user satisfaction but clinical outcomes."

This guide explores the essential UX considerations distinguishing successful life science AI SaaS products, examining structural differences from generic software and the design imperatives underpinning regulatory compliance, user adoption, and operational excellence.

Part 1: Generic SaaS vs. LifeScience AI SaaS—Fundamental UX Differences

1.1 Why Generic SaaS Principles Fall Short

The broader SaaS industry prioritizes simplicity, intuitive navigation, low learning curves, and rapid onboarding—minimizing activation friction to maximize engagement and reduce support costs.

Life science AI SaaS operates in a regulatory and clinical environment where these priorities are inverted. While poorly designed consumer SaaS frustrates users and increases churn, poorly designed life science applications compromise patient outcomes, invalidate research, trigger regulatory action, and expose organizations to liability.

The FDA's Human Factors Engineering guidance states:

"FDA is primarily concerned that devices are safe and effective for the intended users, uses, and use environments."

In other words, user-friendliness is secondary to safety.

Stanford HCI research demonstrates that cognitive load management in clinical settings requires design patterns prioritizing error prevention over efficiency, reversing typical consumer software priorities.

Micro-Summary

Generic SaaS principles fail in life sciences because the primary design constraint shifts from user satisfaction to patient safety and regulatory compliance, requiring fundamentally different methodologies and risk prioritization frameworks.


1.2 Regulatory Compliance as Design Foundation

Generic SaaS navigates data privacy regulations like GDPR. Life science AI SaaS faces a fundamentally different compliance landscape that shapes core UX architecture.

McKinsey research shows regulatory compliance adds 40-60% to development timelines for healthcare software, with the majority attributed to UX validation and documentation.

21 CFR Part 11: Electronic Records Foundation

21 CFR Part 11 requires authentication mechanisms, audit trails, electronic signatures, data export capabilities, and user access controls—all transparently integrated without compromising workflow efficiency.

Definition: 21 CFR Part 11 is an FDA regulation establishing requirements for electronic records and signatures, mandating authentication, audit trails, validation, and data integrity controls embedded into core system design.
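One way to picture an audit trail that is embedded in core design rather than bolted on is an append-only log in which every entry records who changed what, when, and why. The sketch below is illustrative only: the `AuditTrail` class, its field names, and the hash chaining used to make tampering detectable are assumptions made for this example, not requirements spelled out in the regulation.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditTrail:
    """Append-only change log; each entry commits to the previous entry's hash."""
    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def record(self, user_id, action, record_id, old_value, new_value, reason=""):
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "action": action,
            "record_id": record_id,
            "old_value": old_value,
            "new_value": new_value,
            "reason": reason,
            "previous_hash": self.entries[-1]["hash"] if self.entries else "0" * 64,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True only if no entry was altered, removed, or reordered."""
        previous_hash = "0" * 64
        for entry in self.entries:
            if entry["previous_hash"] != previous_hash:
                return False
            if entry["hash"] != self._digest(entry):
                return False
            previous_hash = entry["hash"]
        return True


# Hypothetical usage: logging happens in the background of a normal edit.
trail = AuditTrail()
trail.record("coordinator-17", "update", "lab-result-204",
             old_value="4.1", new_value="4.7", reason="transcription error")
trail.record("physician-03", "sign", "visit-form-88",
             old_value=None, new_value="signed")
print(trail.verify())
```

The user-facing workflow never has to mention the log; the reviewer-facing interface can call `verify()` and render the entries in order.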

HIPAA and FDA Requirements

HIPAA mandates role-based access control, comprehensive encryption, multi-factor authentication, and detailed audit logging. MIT Technology Review notes that "HIPAA-compliant design requires 3-4x more UX iterations than standard enterprise software."

FDA Human Factors Engineering establishes formal validation requiring documented user research, use-related risk analysis, formative evaluation, and summative validation with predefined success criteria.

The Baymard Institute found that compliance requirements increase interaction cost by 25-35% but reduce error rates by 60-70% in validated healthcare systems.

Micro-Summary

Regulatory compliance—spanning 21 CFR Part 11, HIPAA, and FDA HFE—transforms UX from optimization to foundational architecture, requiring embedded audit trails, formal validation, and design patterns prioritizing traceability over efficiency.


1.3 User Heterogeneity and Role-Specific Workflows

Generic SaaS typically serves homogeneous user populations—project management tools serve project managers, CRMs serve salespeople. Life science AI SaaS serves radically heterogeneous groups simultaneously, each with distinct technical expertise, workflow priorities, and safety-critical responsibilities.

A single clinical trial system must serve:

Researchers (requiring statistical capability and granular data control)

Coordinators (prioritizing patient recruitment)

Physicians (needing summary insights and compliance alerts)

Patients (with limited health literacy)

Regulatory specialists (focusing on audit trails)

NIH research shows 79.4% of clinicians identify feature gaps while 71.9% report usability frustrations, highlighting the challenge of serving diverse needs.

Harvard Business Review found that one-size-fits-all design creates major adoption barriers, with 68% of failed healthcare implementations attributed to inadequate role differentiation.

Definition: Role-Based Access Control (RBAC) is a security and UX pattern restricting system access and interface presentation based on user roles, ensuring individuals see only appropriate data and functions while maintaining compliance and reducing cognitive load.

Each role operates with different information hierarchies—what's critical for a clinician (patient safety alerts) differs fundamentally from a data scientist's priorities (statistical power and model accuracy).
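A minimal sketch of role-based presentation, assuming a hypothetical permission map for the five roles listed above. The feature names are invented for illustration, and a real system would load this mapping from a validated configuration rather than hard-coding it. A denial returns an explanation rather than a bare refusal.

```python
from enum import Enum


class Role(Enum):
    RESEARCHER = "researcher"
    COORDINATOR = "coordinator"
    PHYSICIAN = "physician"
    PATIENT = "patient"
    REGULATORY = "regulatory"


# Hypothetical feature names; each role sees only what its work requires.
PERMISSIONS = {
    Role.RESEARCHER: {"dataset_export", "statistical_analysis"},
    Role.COORDINATOR: {"recruitment_dashboard"},
    Role.PHYSICIAN: {"patient_summary", "safety_alerts"},
    Role.PATIENT: {"own_record"},
    Role.REGULATORY: {"audit_trail"},
}


def visible_features(role: Role) -> list[str]:
    """Features the interface should render for this role."""
    return sorted(PERMISSIONS[role])


def check_access(role: Role, feature: str) -> tuple[bool, str]:
    """Return (allowed, explanation); the explanation is empty when allowed."""
    if feature in PERMISSIONS[role]:
        return True, ""
    allowed_roles = sorted(r.value for r, feats in PERMISSIONS.items() if feature in feats)
    if not allowed_roles:
        return False, f"'{feature}' is not a recognized feature."
    return False, (
        f"'{feature}' is not available for the {role.value} role. "
        f"It requires one of: {', '.join(allowed_roles)}."
    )


print(visible_features(Role.PHYSICIAN))
print(check_access(Role.PATIENT, "audit_trail"))
```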

Micro-Summary

Life science AI SaaS serves radically heterogeneous populations—researchers, clinicians, patients, regulatory specialists—requiring role-based interface design and personalized workflows accommodating conflicting priorities, a complexity rarely encountered in consumer SaaS.

1.4 Data Complexity and Analytical Sophistication

Generic SaaS works with structured, well-bounded datasets: customer records, transaction histories, project timelines. Life science AI SaaS handles fundamentally different data types, scales, and analytical requirements.

Drug discovery involves complex chemical structures, molecular modeling data, and experimental parameters. Clinical trials integrate patient demographics, lab results, imaging data, genomic information, and phenotypic data. Regeneron, for example, processed 1.5 million genome sequences and reduced query times from 30 minutes to 3 seconds.

Stanford's Data Science Institute notes that

"life science visualization requires domain-specific graphical languages preserving statistical integrity while enabling exploratory analysis, requiring 4-5x more design iteration than business dashboards."

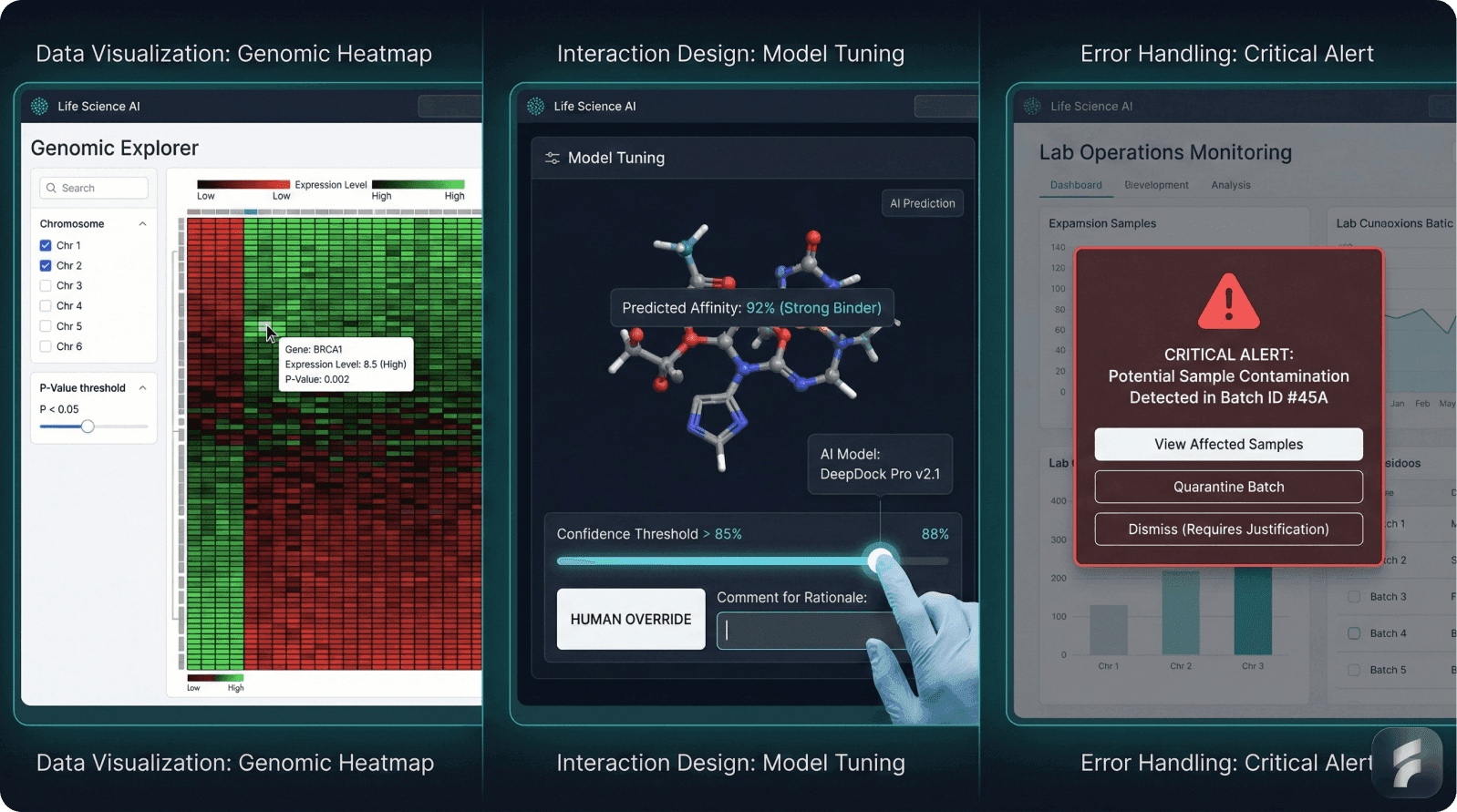

Life Science Visualization Types

Volcano plots: Differential expression analysis

Kaplan-Meier curves: Survival analysis

Interaction networks: Drug target relationships

Heatmaps: Gene expression patterns

MIT research indicates life science researchers spend 40-60% of analysis time on data preparation and visualization configuration, highlighting the importance of intuitive analytical interfaces.

Micro-Summary

Life science data complexity—molecular structures, genomic sequences, multi-dimensional clinical datasets—requires specialized visualization, sophisticated information architecture, and domain-specific analytical interfaces enabling exploratory research while maintaining statistical integrity.

1.5 Safety Criticality and Error Consequence Asymmetry

The most profound difference lies in error consequence asymmetry. In generic SaaS, user error causes lost time or data correction. In life science applications, error can alter research conclusions, compromise patient safety, invalidate clinical trials, or trigger regulatory sanctions.

Research in the Engineering Science & Technology Journal concluded that in medical contexts, even minor usability issues can have grave consequences. The FDA emphasizes strategies including clear visual hierarchy, consistent labeling, error anticipation, automated validation, and intelligent error alert systems.

Healthcare Information and Management Systems Society research shows these mechanisms reduce critical errors by 60-70% in clinical settings.

Definition: Friction Scoring is a UX methodology quantifying interaction difficulty by measuring steps, cognitive load, and decision points, used to deliberately introduce protective friction in high-risk workflows while minimizing it in routine operations.
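The definition above can be sketched as a weighted sum over the three measured quantities. Everything numeric here is an assumption made for illustration: the weights, the 1-to-5 cognitive load rating, and the two thresholds would need to be calibrated against real usability data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workflow:
    name: str
    steps: int            # discrete user actions to complete the task
    decision_points: int  # places where the user must choose between options
    cognitive_load: int   # hypothetical rating, 1 (trivial) to 5 (demanding)
    high_risk: bool       # True if an error could cause harm


# Assumed weights; not taken from any published standard.
WEIGHTS = {"steps": 1.0, "decision_points": 2.0, "cognitive_load": 3.0}


def friction_score(workflow: Workflow) -> float:
    return (
        WEIGHTS["steps"] * workflow.steps
        + WEIGHTS["decision_points"] * workflow.decision_points
        + WEIGHTS["cognitive_load"] * workflow.cognitive_load
    )


def review(workflow: Workflow, min_high_risk: float = 15.0, max_routine: float = 10.0) -> str:
    """Flag high-risk workflows that are too easy and routine ones that are too hard."""
    score = friction_score(workflow)
    if workflow.high_risk and score < min_high_risk:
        return "add protective friction"
    if not workflow.high_risk and score > max_routine:
        return "remove friction"
    return "ok"


dose_change = Workflow("Change medication dose", steps=2, decision_points=1,
                       cognitive_load=2, high_risk=True)
lab_history = Workflow("View lab history", steps=6, decision_points=2,
                       cognitive_load=2, high_risk=False)

for workflow in (dose_change, lab_history):
    print(workflow.name, friction_score(workflow), review(workflow))
```

The point of scoring in both directions is that the same metric that justifies a confirmation step on a dose change also flags a routine lookup that has accumulated too many clicks.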

The Nielsen Norman Group notes that

"Healthcare UX must embrace strategic friction—introducing deliberate barriers in high-risk workflows while removing friction from routine tasks, inverting the consumer software principle."

McKinsey demonstrates that while aggressive friction reduction improves retention curves in consumer software by 15-25%, the same approach in clinical software increases error rates by 40-60%, ultimately reducing adoption as trust erodes.

Micro-Summary

Error consequence asymmetry—where mistakes compromise patient safety rather than merely frustrating users—requires design patterns embracing strategic friction, implementing multi-layer error prevention, and prioritizing safety over efficiency, fundamentally inverting consumer SaaS philosophy.

UX Design Philosophy: General SaaS vs AI LifeScience SaaS

| Dimension | General SaaS | AI LifeScience SaaS |
|---|---|---|
| Primary Design Goal | User satisfaction, engagement, and retention through intuitive interfaces and minimal friction | Patient safety and regulatory compliance, with user-friendliness as secondary concern |
| Error Consequences | User frustration, lost time, data correction, potential churn | Compromised patient safety, invalidated research, clinical trial failures, regulatory sanctions |
| Regulatory Framework | GDPR and basic data privacy laws requiring standard security measures | 21 CFR Part 11, HIPAA, FDA Human Factors Engineering requiring formal validation, audit trails, and embedded compliance |
| User Population | Homogeneous groups with similar technical expertise and workflow needs | Radically heterogeneous populations: researchers, clinicians, patients, regulatory specialists with conflicting priorities |
| Friction Philosophy | Minimize all friction to maximize engagement and reduce activation barriers | Strategic friction: deliberate barriers in high-risk workflows while removing friction from routine tasks |
| Data Complexity | Structured, well-bounded datasets: customer records, transaction histories | Multi-dimensional complexity: molecular structures, genomic sequences, clinical datasets requiring 4-5x more design iteration |
| Validation Requirements | A/B testing, analytics-driven optimization, user feedback loops | FDA-mandated formal validation with documented user research, use-related risk analysis, formative and summative testing |
| Design Timeline Impact | Standard development cycles with continuous deployment and rapid iteration | 40-60% longer development timelines due to regulatory compliance and UX validation requirements |
| Interaction Cost | Minimize steps and cognitive load for all workflows to maximize efficiency | 25-35% higher interaction cost by design, but 60-70% reduction in error rates through protective mechanisms |
| AI Transparency | Algorithm details often hidden; focus on seamless user experience | Mandated explainability with confidence bounds, clinical language explanations, override mechanisms, and visible governance |
| Post-Launch Status | Continuous optimization based on usage patterns and feature requests | Mandatory post-market surveillance with FDA-required monitoring, model drift detection, and safety event response within 30-90 days |
| Success Metrics | User engagement, retention curves, activation rates, time-to-value | 95% task completion for critical tasks, <5% error rates for high-risk interactions, regulatory approval, patient safety outcomes |

Part 2: Unique UX Considerations—Design Process and Strategic Implementation

2.1 Human-Centered Design as Strategic Foundation

Life science AI SaaS requires fundamentally different design approaches integrating human-centered principles with rigorous regulatory requirements, multi-stakeholder engagement, and iterative validation.

Human-centered design (HCD) is not cosmetic—it determines whether AI systems can be safely integrated into clinical and research workflows. Stanford's Center for Biomedical Informatics found that without HCD embedded from discovery through operation, even highly accurate AI models fail in practice, with deployment failure rates exceeding 70%.

Generic software treats UX as optimization. Life science AI SaaS treats UX as risk mitigation.

Core HCD Principles

Respect for Workflow Realities: Clinicians operate under time pressure, cognitive load, and constant interruptions. MIT research shows clinical workflows involve 12 interruptions per hour, requiring interfaces supporting resumption and maintaining context.

Transparency Without Oversimplification: AI outputs must be intelligible without requiring data science expertise while retaining sufficient detail for validation and override. NIST notes that

"effective AI transparency requires 3-4 levels of detail serving both clinical decision-makers and technical reviewers."

Equitable Design: Participatory co-design must actively involve diverse stakeholders. AHRQ research demonstrates that AI models developed without diverse patient input exhibit 25-40% higher error rates for underrepresented populations.

Micro-Summary

Human-centered design transcends aesthetic optimization to become foundational risk mitigation, requiring workflow respect, transparency calibrated to user expertise, visible governance, and equitable participatory design involving diverse stakeholders from discovery through deployment.

2.2 Multi-Stakeholder Engagement and Participatory Design

Life science UX requires interdisciplinary teams including data scientists, engineers, usability experts, healthcare professionals, and organizational stakeholders. This is operationally essential because different groups have genuinely conflicting UX priorities.

| Stakeholder | Primary Priorities | Design Implications |
|---|---|---|
| Researchers | Data granularity, analytical flexibility | Complex query builders, statistical documentation |
| Clinicians | Speed, decision clarity, workflow integration | Streamlined interfaces, summary insights |
| Patients | Accessibility, understandability | Plain language, privacy transparency |
| Regulatory | Audit trails, documentation completeness | Detailed logging, signature workflows |
| IT/Compliance | Data security, system stability | Permission modeling, encryption visibility |

Gartner research shows healthcare projects engaging all five stakeholder groups achieve 3.2x higher adoption rates and 2.7x lower error rates.

NIH emphasizes that

"Authentic patient involvement produces fundamentally different design priorities than proxy users, with patient co-designers identifying 40-60% more usability issues."

Definition: Participatory Design is a methodology positioning users as active co-creators rather than passive feedback providers, involving them in problem definition, solution generation, and validation to surface insights invisible to design professionals.

Implementation requires structured participatory workshops, continuous feedback loops throughout development, and cross-functional collaboration infrastructure. Nielsen Norman Group research shows continuous feedback reduces critical defect rates by 55-65% compared to phase-gated validation.

Micro-Summary

Multi-stakeholder engagement transforms design from expert-driven to participatory, treating diverse populations as co-creators whose conflicting priorities must be balanced through structured workshops, continuous feedback, and cross-functional collaboration surfacing insights invisible to homogeneous teams.


2.3 Agile UX Integrated with Regulatory Validation

Generic Agile prioritizes speed and adaptability. Life science AI SaaS requires integrating Agile with formal validation requirements of FDA regulation and clinical governance.

Agile UX integrates user experience design with Agile software development, enabling rapid iteration while maintaining user-centered focus. However, regulated contexts require adapting standard practices to accommodate formal validation.

Sprint-Integrated Timeline

Sprint N-1: Research & Concept

User research, use-related risk analysis, critical task identification, and participatory design sessions. HIMSS research shows front-loading risk analysis reduces downstream validation failures by 40-50%.

Sprint N: Design & Validation

Design refinement and formative evaluation while developers implement previous designs.

Sprint N+1: Validation & Launch

Summative validation with predefined success criteria, final implementation, and post-launch monitoring frameworks.

Definition: Formative vs. Summative Evaluation - Formative evaluation is rapid, iterative testing throughout development identifying usability issues; summative validation is comprehensive pre-launch testing with predefined success criteria demonstrating regulatory compliance.

Multi-Scale Iteration

Iterations operate at multiple time scales:

Micro-iterations (Daily/Weekly): Quick refinements, small usability tests, analytics-driven optimization

Sprint-Level (2–4 Weeks): Feature design cycles, user feedback integration, design system updates

Release-Level (Monthly/Quarterly): Major launches with comprehensive evaluation, user research synthesis, strategy adjustments

MIT research shows multi-scale iteration reduces time-to-validation by 30-40% while improving defect detection by 45-55%.

Micro-Summary

Agile UX in life sciences integrates rapid iteration with regulatory validation through multi-scale cycles—daily micro-iterations, 2-4 week sprints, and quarterly release validations—enabling simultaneous progress on research, design, and development while maintaining formal documentation and risk-driven prioritization for FDA compliance.

2.4 Use-Related Risk Analysis as Design Driver

The foundation of FDA-compliant life science UX is systematic use-related risk analysis (URRA)—a formal process identifying how users might interact with systems in ways that could cause harm.

URRA is not post-hoc assessment. It's a design methodology shaping UX architecture from discovery through launch. FDA guidance requires URRA initiated early and continuously refined as design evolves.

URRA Core Components

User and Use Environment Analysis: Comprehensive documentation of user populations, technical proficiency, work contexts, and stressors. HIMSS indicates comprehensive use environment analysis identifies 35-45% more risk scenarios than persona-only approaches.

Hazard Identification: Systematic brainstorming using FMEA (Failure Mode and Effects Analysis) to identify failure modes: data misinterpretation, unauthorized access, incorrect predictions, inadvertent deletions.

Definition: FMEA is a systematic methodology for identifying potential failure modes, assessing severity and probability, and prioritizing risk mitigation based on quantified scores guiding design decisions.

Critical Task Identification: Determining which tasks could result in serious harm if performed incorrectly. McKinsey shows organizations identifying critical tasks during URRA allocate 60-70% of UX resources to high-risk interactions.

Risk Quantification: Assessment of severity and probability, so that design effort is prioritized by risk rather than by treating all features as equally important.

Risk Priority Calculation

The critical shift is that design decisions are risk-driven rather than feature-driven. Nielsen Norman Group notes that

"Risk-driven design often requires 5-10x more iteration on high-risk, low-complexity interactions."
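A minimal sketch of how a risk priority might be calculated from the severity and probability assessments described above. The 1-to-5 scales and every score below are hypothetical; classic FMEA worksheets also multiply in a detection rating, which is omitted here to match the two factors this section names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureMode:
    description: str
    severity: int     # assumed scale: 1 (negligible) to 5 (catastrophic)
    probability: int  # assumed scale: 1 (remote) to 5 (frequent)

    def __post_init__(self):
        for name in ("severity", "probability"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def risk_priority(self) -> int:
        return self.severity * self.probability


def prioritize(modes: list[FailureMode]) -> list[FailureMode]:
    """Order failure modes so the highest-risk interactions get design attention first."""
    return sorted(modes, key=lambda mode: mode.risk_priority, reverse=True)


# Failure modes named in the hazard identification step; scores are made up.
modes = [
    FailureMode("Unauthorized access", severity=4, probability=2),
    FailureMode("Data misinterpretation", severity=5, probability=3),
    FailureMode("Inadvertent deletion", severity=3, probability=3),
    FailureMode("Incorrect prediction accepted without review", severity=5, probability=2),
]

ranked = prioritize(modes)
for mode in ranked:
    print(f"{mode.risk_priority:>2}  {mode.description}")
```

Note that the ranking is independent of how complex each interaction is to build, which is the sense in which prioritization becomes risk-driven rather than feature-driven.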

Micro-Summary

Use-related risk analysis shifts from post-hoc assessment to foundational methodology: it systematically analyzes users and use environments, identifies hazards and critical tasks, and quantifies risk. That quantification then drives design prioritization, resource allocation, and multi-layer mitigation based on consequence severity rather than feature complexity.

2.5 Transparent AI Integration and Explainability Design

Life science AI SaaS increasingly leverages machine learning for prediction, pattern detection, and decision support. However, UX design must ensure users never blindly trust algorithmic outputs.

Multistakeholder research emphasizes that patients and healthcare professionals require transparency into how AI models function and must be involved in algorithm development. FDA guidance for AI/ML-enabled clinical decision support requires documentation of how models function and evidence of reliable performance.

Transparency Design Requirements

Clear Predictions with Confidence Bounds: Display "73% probability of developing heart failure within 12 months" with factors influencing the prediction, rather than deterministic statements. MIT research shows displaying confidence intervals increases clinician trust by 35-45% and reduces over-reliance by 25-30%.

Explainability Without Data Science Training: Present model explanations in clinical language. Rather than feature importance scores, use "this prediction was primarily influenced by recent lab values, patient age, and past hospital admissions."

Definition: SHAP is an explainable AI technique calculating feature importance by determining how much each input variable contributed to a specific prediction, enabling transparent communication to non-technical users.

NIST notes that "70-80% of clinicians prefer natural language explanations over mathematical visualizations."

Explicit Override Mechanisms: Clinical judgment must remain paramount. Design must enable clinicians to override recommendations with clear documentation—creating feedback improving the system over time.
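The first two requirements above can be sketched as a small rendering function: it takes a probability, an uncertainty interval, and per-feature contribution scores (such as those a SHAP-style method might produce) and turns them into a clinical-language sentence. The `Prediction` structure, the interval, and the contribution values are all hypothetical inputs; this sketch does not compute them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prediction:
    outcome: str
    horizon: str
    probability: float
    interval: tuple[float, float]    # lower and upper bound on the probability
    contributions: dict[str, float]  # feature label -> signed contribution


def join_names(names: list[str]) -> str:
    if len(names) <= 2:
        return " and ".join(names)
    return ", ".join(names[:-1]) + ", and " + names[-1]


def describe(prediction: Prediction, top_n: int = 3) -> str:
    """Render a prediction as plain clinical language instead of raw scores."""
    low, high = prediction.interval
    # Rank features by the magnitude of their contribution, ignoring sign.
    ranked = sorted(prediction.contributions.items(),
                    key=lambda item: abs(item[1]), reverse=True)
    factors = [name for name, _ in ranked[:top_n]]
    return (
        f"{prediction.probability:.0%} probability of {prediction.outcome} "
        f"within {prediction.horizon} (plausible range {low:.0%} to {high:.0%}). "
        f"This prediction was primarily influenced by {join_names(factors)}."
    )


example = Prediction(
    outcome="developing heart failure",
    horizon="12 months",
    probability=0.73,
    interval=(0.64, 0.81),
    contributions={
        "recent lab values": 0.21,
        "patient age": 0.12,
        "past hospital admissions": 0.09,
        "body mass index": -0.02,
    },
)

text = describe(example)
print(text)
```

The raw contribution scores stay available for technical reviewers; the clinician-facing view shows only the ranked factor names.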

Harvard Business Review emphasizes that

"Optimal design balances model accuracy with user comprehension, accepting 2-5% accuracy reductions to achieve 40-60% improvements in understanding and appropriate trust calibration."

Micro-Summary

Transparent AI integration requires displaying predictions with confidence bounds, translating explanations into clinical language, enabling comparison with alternatives, making updates visible, and providing explicit override mechanisms respecting clinical judgment while building system intelligence through documented feedback.

2.6 Compliance Integration Without Workflow Disruption

Life science applications must embed regulatory compliance into workflows without grinding work to a halt. The challenge is making compliance invisible during normal operation while maintaining full traceability.

Electronic Signatures: Clinicians can't pause work to invoke signing ceremonies for every data entry. Yet every change must be traceable. The solution is intelligent automation: background logging without user action, with formal signatures required only for submission events. HIMSS research shows well-designed signature workflows reduce signing time by 60-70% while improving compliance from 75-85% to 95-99%.

Role-Based Access Control: Users should be unaware of access controls during normal operation. When encountering restrictions, the UX must clearly explain why information is unavailable and what authorization is required. Nielsen Norman Group notes "effective RBAC is invisible until violated, reducing access-related support requests by 50-60%."

Data Validation: Real-time validation prevents invalid entries at input rather than discovering errors during analysis. MIT research shows real-time validation reduces data quality issues by 70-80% and decreases time-to-analysis by 40-50%.

The design principle is to keep compliance mechanisms invisible until they are needed, reducing cognitive load while maintaining regulatory defensibility.
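As an illustration of validation at the point of input, the sketch below checks a single field as it is entered and returns a message the interface can show immediately. The field names and plausibility limits are hypothetical placeholders, not clinical reference ranges.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FieldRule:
    label: str
    unit: str
    minimum: float
    maximum: float


# Hypothetical plausibility limits chosen only to make the example concrete.
RULES = {
    "potassium": FieldRule("Serum potassium", "mmol/L", 1.0, 10.0),
    "heart_rate": FieldRule("Heart rate", "bpm", 20, 300),
}


def validate_entry(field_name: str, raw_value: str) -> Optional[str]:
    """Return None if the entry is acceptable, otherwise a user-facing message."""
    rule = RULES.get(field_name)
    if rule is None:
        return f"Unknown field '{field_name}'."
    try:
        value = float(raw_value)
    except ValueError:
        return f"{rule.label} must be a number."
    # NaN and infinity fail this comparison and are rejected as out of range.
    if not rule.minimum <= value <= rule.maximum:
        return (f"{rule.label} must be between {rule.minimum:g} "
                f"and {rule.maximum:g} {rule.unit}.")
    return None


for name, raw in [("potassium", "4.2"), ("potassium", "42"), ("heart_rate", "abc")]:
    print(name, repr(raw), "->", validate_entry(name, raw))
```

A valid entry produces no message at all, which keeps the check invisible during normal operation.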

Micro-Summary

Regulatory compliance integration achieves invisibility during normal operation through intelligent automation of signatures and audit trails, role-based access visible only when violated, real-time validation preventing errors at input, and accessible audit interfaces enabling verification without database expertise, maintaining zero friction for compliant workflows.

2.7 Post-Market Surveillance and Continuous Learning

Life science AI is never "finished." Clinical guidelines evolve, populations change, care pathways are reconfigured, new risks emerge, and AI models drift.

Effective UX design must incorporate feedback loops, accountability structures, and data stewardship that enable continuous improvement. FDA guidance requires that medical devices maintain active monitoring of real-world performance, with mechanisms for detecting and responding to safety issues within 30-90 days.

Operationalized Monitoring

Performance Monitoring: Track how frequently users override AI recommendations, what error rates occur, and whether specific populations have worse outcomes. NIH research shows systematic override tracking identifies model drift 45 days earlier than accuracy-only monitoring.

Definition: Model Drift is the degradation of machine learning model performance over time as statistical properties of input data change, requiring continuous monitoring and periodic retraining to maintain accuracy and safety.

User Feedback Integration: Low-friction channels for reporting problems, requesting features, or flagging concerning outputs. McKinsey indicates visible feedback loops increase user trust by 35-45% and double voluntary error reporting.

Transparency into Updates: Document what changes were made, why, and what impact is expected, demonstrating that the system evolves based on user input.

Governance Visibility: Make decision-making about updates, retraining, and feature changes visible to users. Stanford research shows transparent governance increases regulatory audit success by 40-50% and reduces post-market safety events by 30-40%.
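The override tracking described under Performance Monitoring can be sketched as a rolling window over recent recommendations, flagged when the override rate climbs well above an established baseline. The `OverrideMonitor` class, the window size, and the tolerance are assumptions for illustration; a production system would pair this with accuracy metrics and subgroup breakdowns.

```python
from collections import deque


class OverrideMonitor:
    """Track how often clinicians override AI recommendations in a rolling window."""

    def __init__(self, baseline_rate: float, window_size: int = 200, tolerance: float = 0.10):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self.window = deque(maxlen=window_size)  # oldest decisions fall off automatically

    def record(self, overridden: bool) -> None:
        self.window.append(overridden)

    @property
    def override_rate(self) -> float:
        return sum(self.window) / len(self.window) if self.window else 0.0

    def drift_suspected(self) -> bool:
        # Only judge once the window is full, to avoid alarms on tiny samples.
        window_full = len(self.window) == self.window.maxlen
        return window_full and self.override_rate > self.baseline_rate + self.tolerance


monitor = OverrideMonitor(baseline_rate=0.10, window_size=50, tolerance=0.10)

# Simulated stable period: one override in every ten recommendations.
for i in range(50):
    monitor.record(i % 10 == 0)
stable_flag = monitor.drift_suspected()

# Simulated later period: roughly one override in every three recommendations.
for i in range(50):
    monitor.record(i % 3 == 0)
later_flag = monitor.drift_suspected()

print(stable_flag, later_flag, round(monitor.override_rate, 2))
```

A rising override rate does not prove the model has drifted, but it is a signal that arrives from normal use without waiting for labeled outcome data.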

Harvard Business Review notes that

"Organizations treating post-market surveillance as core functionality rather than regulatory obligation achieve 2.5-3x lower adverse event rates and 1.8-2.2x higher long-term adoption."

Micro-Summary

Post-market surveillance requires operationalized performance monitoring detecting model drift 45 days earlier, low-friction feedback channels doubling reporting, transparent update documentation, comparative analysis enabling degradation detection, and visible governance increasing audit success by 40-50% while recognizing that safety depends on continuous improvement not frozen perfection.

Part 3: Market Imperatives and Evidence-Based Implementation

3.1 Market Adoption as a UX Problem

Despite robust market growth in life science AI, actual end-user adoption remains problematic. Digital health app adoption rates don't exceed 8% of the population, with 95% of downloaded healthcare apps never used or used only once.

A study of EHR systems found that even as adoption reached 75% of hospitals, complaints about poor workflow integration remained pervasive. HIMSS research shows clinicians spend 35-40% of their time on EHR navigation, with 60-70% reporting that current systems increase cognitive load.

This adoption gap is not a technology problem—it's a UX problem.

UX design accounts for 50% of competitive advantage in healthcare digital products and 33% of customer churn prevention. The Nielsen Norman Group emphasizes that

"In mature healthcare markets, UX becomes the primary differentiator because algorithmic parity exists across competitors."

UX Impact on Market Performance

Adoption rates: 3-5x higher with excellent UX

Time-to-productivity: 50-60% reduction

Error rates: 60-70% decrease

Support costs: 40-50% reduction

Retention rates: 35-45% increase

The market opportunity for life science AI SaaS lies not in algorithmic sophistication but in UX that enables rapid adoption and workflow integration. McKinsey shows companies investing 15-20% of development budgets in UX research capture 2-3x market share compared to competitors investing less than 5%.

Micro-Summary

Market adoption failures, with 95% of healthcare apps unused and EHR workflow complaints persisting even at 75% hospital adoption, stem from UX deficiencies rather than technology gaps. This creates opportunities for organizations investing 15-20% of budgets in rigorous user-centered design to capture 2-3x market share through products achieving 3-5x higher adoption and 60-70% lower error rates.

3.2 Implementation Principles: From Research to Validation

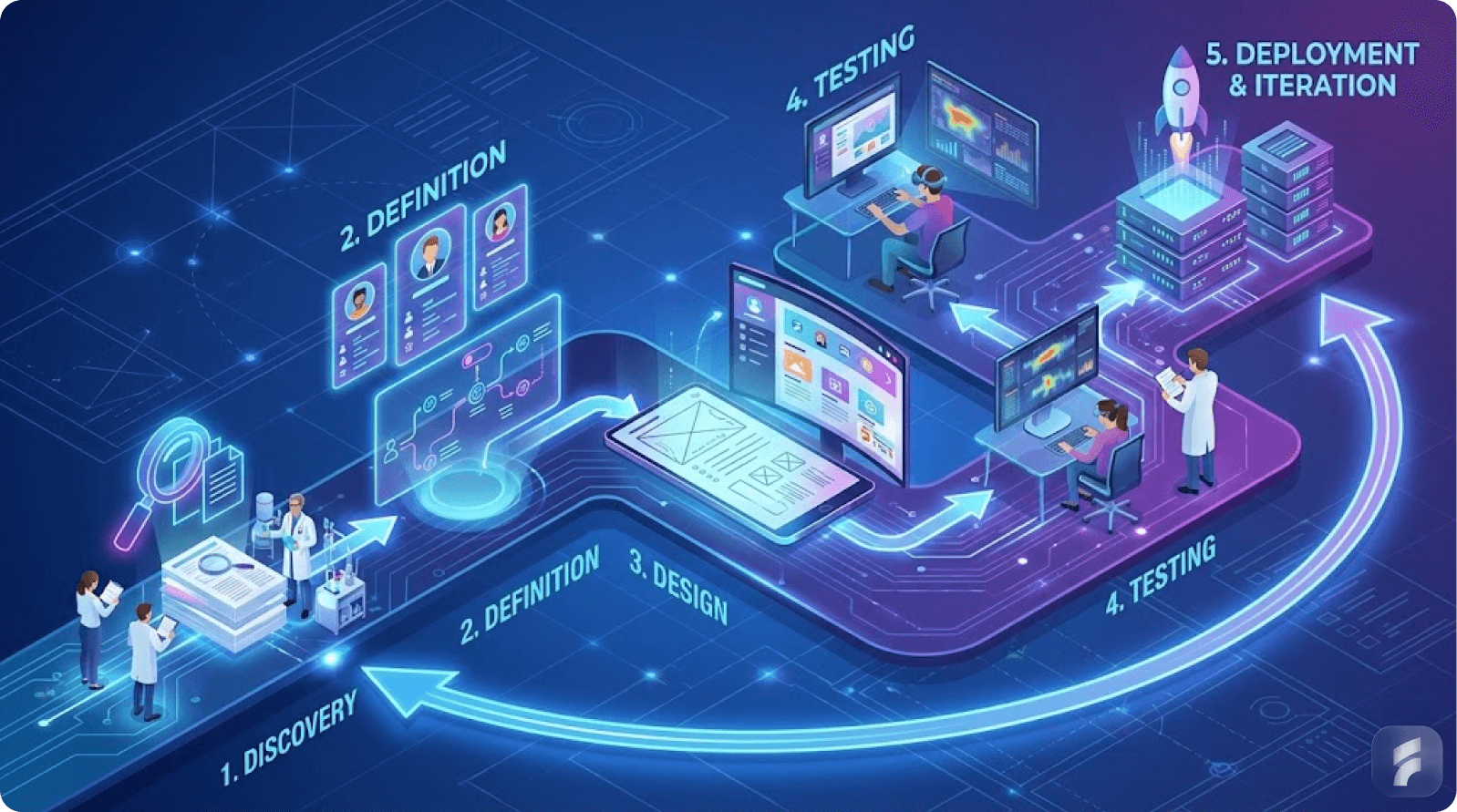

Successful life science AI SaaS products implement a structured, evidence-based UX methodology spanning six phases. Each phase builds on previous work while maintaining iterative feedback loops.

Stanford research shows organizations following structured UX methodologies achieve 40-50% faster time-to-regulatory-approval and 30-40% higher first-year adoption rates compared to ad-hoc approaches.

Six-Phase Methodology

Phase 1: User Research and Contextual Inquiry

Conducted with representative users in authentic work environments, capturing how they currently solve problems, workarounds employed, information priorities, and errors encountered. Nielsen Norman Group emphasizes "contextual inquiry identifies 50-60% more usability requirements than remote interviews because observed behavior differs substantially from reported behavior."

Phase 2: Use-Related Risk Analysis and Problem Definition

Formal documentation of potential use errors and resulting harms, with systematic identification of critical tasks and risk levels. MIT research shows formal problem reframing increases solution quality by 35-45% and reduces feature bloat by 40-50%.

Definition: How Might We Framework is a problem reframing technique transforming challenges into opportunities by converting "How can we build X feature?" into "How might we help users achieve Y outcome?", shifting focus from solution implementation to user need fulfillment.

Phase 3: Participatory Design and Iterative Prototyping

Users co-create design solutions through facilitated sessions and rapid prototyping. Harvard Business Review notes: "Participatory design generates 3-4x more viable alternatives than expert-only brainstorming, with user-generated solutions achieving 40-50% higher satisfaction."

Phase 4: Formative Evaluation and Development Integration

Concurrent formative evaluation throughout sprints identifies usability issues early. AHRQ research shows continuous formative evaluation reduces critical defects by 55-65% and decreases validation rework by 60-70%.

Phase 5: Summative Human Factors Validation Testing

Formal testing with predefined success criteria, objective measurement, representative participants, and realistic use conditions. FDA guidance requires 95% task completion rates for critical tasks and < 5% error rates for high-risk interactions.
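Predefined success criteria lend themselves to an automated check over the test results. The following Python sketch assumes the two thresholds quoted above (95% completion for critical tasks, error rates under 5% for high-risk interactions); the `TaskResult` schema, the `evaluate` function, and the sample data are hypothetical, and a real validation protocol would define its own criteria and analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskResult:
    """Aggregated summative test results for one task (hypothetical schema)."""
    task: str
    attempts: int
    completions: int
    use_errors: int
    critical: bool = False
    high_risk: bool = False


def evaluate(results, min_completion=0.95, max_error_rate=0.05):
    """Return (task, reason) pairs for every predefined criterion that is not met."""
    failures = []
    for r in results:
        if r.attempts <= 0:
            raise ValueError(f"task {r.task!r} has no recorded attempts")
        completion = r.completions / r.attempts
        error_rate = r.use_errors / r.attempts
        # Critical tasks must reach the completion threshold.
        if r.critical and completion < min_completion:
            failures.append((r.task, f"completion {completion:.1%} is below {min_completion:.0%}"))
        # High-risk interactions must stay strictly under the error ceiling.
        if r.high_risk and error_rate >= max_error_rate:
            failures.append((r.task, f"error rate {error_rate:.1%} is not below {max_error_rate:.0%}"))
    return failures


if __name__ == "__main__":
    # Sample data invented for illustration.
    session = [
        TaskResult("confirm dose", 30, 29, 1, critical=True, high_risk=True),
        TaskResult("label sample", 30, 27, 3, critical=True, high_risk=True),
        TaskResult("export report", 30, 20, 6),
    ]
    for task, reason in evaluate(session):
        print(f"FAIL {task}: {reason}")
```

Tasks that are neither critical nor high-risk are recorded but not gated, which keeps the pass/fail decision focused on the interactions where use errors could cause harm.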

Phase 6: Post-Market Surveillance and Continuous Improvement

Ongoing monitoring of user interactions, error rates, and performance enables rapid identification of emerging issues. McKinsey shows mature post-market surveillance detects safety issues 45-60 days earlier and resolves them 30-40% faster.
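As a rough illustration of this kind of monitoring, the Python sketch below tracks how often users override AI recommendations over a sliding window and raises a flag when the rate climbs well above an expected baseline. The `OverrideMonitor` class, the window size, and the tolerance are assumptions chosen for the example, not values taken from any cited study or production system.

```python
from collections import deque


class OverrideMonitor:
    """Sliding-window tracker for the share of AI recommendations users override."""

    def __init__(self, baseline_rate, window=200, tolerance=0.10):
        if not 0.0 <= baseline_rate <= 1.0:
            raise ValueError("baseline_rate must be between 0 and 1")
        if window <= 0:
            raise ValueError("window must be positive")
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        # deque with maxlen drops the oldest event once the window is full.
        self._events = deque(maxlen=window)

    @property
    def override_rate(self):
        """Current override rate, or None before any event is recorded."""
        if not self._events:
            return None
        return sum(self._events) / len(self._events)

    def record(self, overridden):
        """Record one recommendation outcome; return True if an alert is warranted."""
        self._events.append(bool(overridden))
        if len(self._events) < self._events.maxlen:
            return False  # wait for a full window before judging
        return self.override_rate > self.baseline_rate + self.tolerance


if __name__ == "__main__":
    monitor = OverrideMonitor(baseline_rate=0.10, window=10, tolerance=0.10)
    outcomes = [False] * 10 + [True] * 5  # invented sequence: overrides start rising
    for i, overridden in enumerate(outcomes, start=1):
        if monitor.record(overridden):
            print(f"event {i}: override rate {monitor.override_rate:.0%} exceeds threshold")
```

A rising override rate can signal that users no longer trust the model's output before accuracy metrics show a change, which is why the sketch watches overrides rather than prediction accuracy.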

Stanford research indicates that a comprehensive UX methodology increases development costs by 15-25% but reduces total ownership costs by 40-60% through lower support costs and higher retention.

Micro-Summary

Evidence-based implementation follows six phases: contextual inquiry in authentic environments, formal risk analysis and problem reframing, participatory prototyping with users as co-creators, continuous formative evaluation, summative validation demonstrating compliance, and post-market surveillance enabling improvement. The comprehensive methodology increases development costs 15-25% while reducing total ownership costs 40-60%.

Conclusion

Life science AI SaaS represents one of the highest-stakes applications of software design. Designed well, the products emerging from this industry will enable discovery of new treatments, acceleration of clinical trials, and improvements in patient care. Designed poorly, they will contribute to research delays, trial failures, and regulatory setbacks.

The difference between success and failure is not algorithmic. Most life science AI products have comparable machine learning sophistication. The difference is user experience: whether the product integrates seamlessly into complex workflows, whether it surfaces critical information intuitively, whether it enables users to maintain compliance automatically, and whether it builds user trust through transparency and error prevention.

The unique considerations for life science AI SaaS—human-centered design methodology, multi-stakeholder engagement, agile integration with regulatory validation, systematic risk analysis, transparent AI integration, and post-market learning—are not luxuries or polish. They are fundamental design requirements that enable regulatory compliance, user adoption, operational excellence, and ultimately, the realization of AI's potential in advancing human health.

Organizations that approach UX as a regulatory and strategic imperative, embedding clinician and patient input from discovery through post-market surveillance, will define this emerging market. The market opportunity is substantial: $15.46 billion by 2034 with accelerating adoption globally.

The competitive advantage belongs to companies that recognize sophisticated AI is table stakes, while thoughtful, evidence-based, user-centered design is the actual differentiator. Organizations that invest in comprehensive UX design processes—grounded in human-centered principles, validated through formal testing, and continuously improved through post-market surveillance—will capture disproportionate market share and deliver products that genuinely improve human health.

Glossary

Activation Friction: The resistance or difficulty users encounter when initiating use of a product or feature, measured by steps required, cognitive load imposed, and decision points encountered before achieving initial value.

Cognitive Load: The total mental effort required to use a system, encompassing working memory demands, decision-making complexity, and attention requirements that can impair performance when excessive.

Formative Evaluation: Rapid, iterative usability testing conducted throughout development to identify and fix design problems early, as distinct from summative validation, which demonstrates regulatory compliance at project end.

Friction Scoring: A UX methodology quantifying interaction difficulty by measuring steps, cognitive load, and decision points required to complete tasks, used to deliberately introduce protective friction in high-risk workflows while minimizing it elsewhere.

Human-Centered Design (HCD): A design philosophy and methodology that positions user needs, capabilities, and contexts as the primary drivers of design decisions, involving users as co-creators throughout discovery, design, validation, and improvement phases.

Information Architecture: The structural design of information environments encompassing organization schemes, navigation systems, labeling conventions, and search mechanisms that enable users to find, understand, and use complex information effectively.

Mental Models: The internal cognitive representations users develop of how systems work, what capabilities exist, and what actions produce desired outcomes, with design effectiveness depending on alignment between system behavior and user mental models.

Model Drift: The degradation of machine learning model performance over time as statistical properties of input data change, requiring continuous monitoring and periodic retraining to maintain accuracy and safety in production environments.

Participatory Design: A design methodology treating users as active co-creators rather than passive feedback providers, involving them in problem definition, solution generation, and validation to surface insights invisible to design professionals.

Progressive Disclosure: An information architecture pattern presenting critical information immediately while making supporting details available on demand through drill-down or expansion, reducing cognitive load while maintaining access to granular data for expert users.

Retention Curve: A graph showing what percentage of users continue using a product over time, with steep early declines indicating onboarding problems and gradual long-term declines suggesting inadequate ongoing value delivery.

Role-Based Access Control (RBAC): A security model restricting system access based on user roles rather than individual permissions, simplifying administration while ensuring users access only data and functions appropriate to their responsibilities.

Summative Validation: Comprehensive pre-launch testing with predefined success criteria demonstrating regulatory compliance and safe operation, distinguished from formative evaluation by its rigor, documentation requirements, and regulatory submission purpose.

Use-Related Risk Analysis (URRA): A systematic process identifying how users might interact with systems in ways that cause harm, quantifying risk through severity and probability assessment, and driving design prioritization based on consequence severity rather than feature complexity.

Usability Debt: The accumulated cost of usability problems left unaddressed during development, increasing over time through user frustration, error rates, support costs, and eventual redesign requirements if not systematically resolved.

References

Authoritative Institutions and Research Bodies Cited:

Agency for Healthcare Research and Quality (AHRQ)

Baymard Institute

Food and Drug Administration (FDA)

Gartner

Harvard Business Review (HBR)

Healthcare Information and Management Systems Society (HIMSS)

McKinsey & Company

MIT Computer Science and Artificial Intelligence Laboratory

MIT Technology Review

National Institute of Standards and Technology (NIST)

National Institutes of Health (NIH)

Nielsen Norman Group

NovaOne Advisor

Precedence Research

Stanford Center for Biomedical Informatics

Stanford Data Science Institute

Stanford HCI (Human-Computer Interaction)

Transparency Market Research

Note: This article synthesizes research from peer-reviewed studies, regulatory guidance documents, industry reports, and authoritative UX research institutions to provide evidence-based recommendations for life science AI SaaS product development.