Last Update: Nov 29, 2025

Test Early, Test Small: Just 5–10 users can uncover 85% of usability issues, making small-scale testing not only viable but crucial for AI SaaS MVPs.

Ditch Personal Networks: Relying on friends or connections introduces bias. Use niche communities, beta platforms, and targeted outreach for unbiased, high-quality feedback.

Use Tiered Recruitment: Combine low-cost online communities, social media surveys, and premium research panels for balanced reach and quality.

Combine Testing Types: Start with moderated testing for deep insights, followed by unmoderated testing for scale and pattern validation.

Beware of Bias: Mitigate founder and user bias through structured scoring, neutral questions, and diverse participant pools.

Interpret Signals Correctly: Not all feedback is equal. Identify strong signals through behavioral patterns and repeated pain points before making product decisions.

Optimize for AI SaaS Nuances: Prioritize transparency, explainability, and education to build trust and usability in AI-driven products.

Use a Lean Budget: Effective MVP testing can be done for $500–$1,500 using a 4-week cycle, ideal for startups with limited resources.

Avoid Common Traps: Don’t rely on positive feedback alone; test before the product feels perfect, and combine qualitative with quantitative data.

Make Evidence-Based Decisions: Use testing results to confidently pivot, persevere, or iterate; never guess.

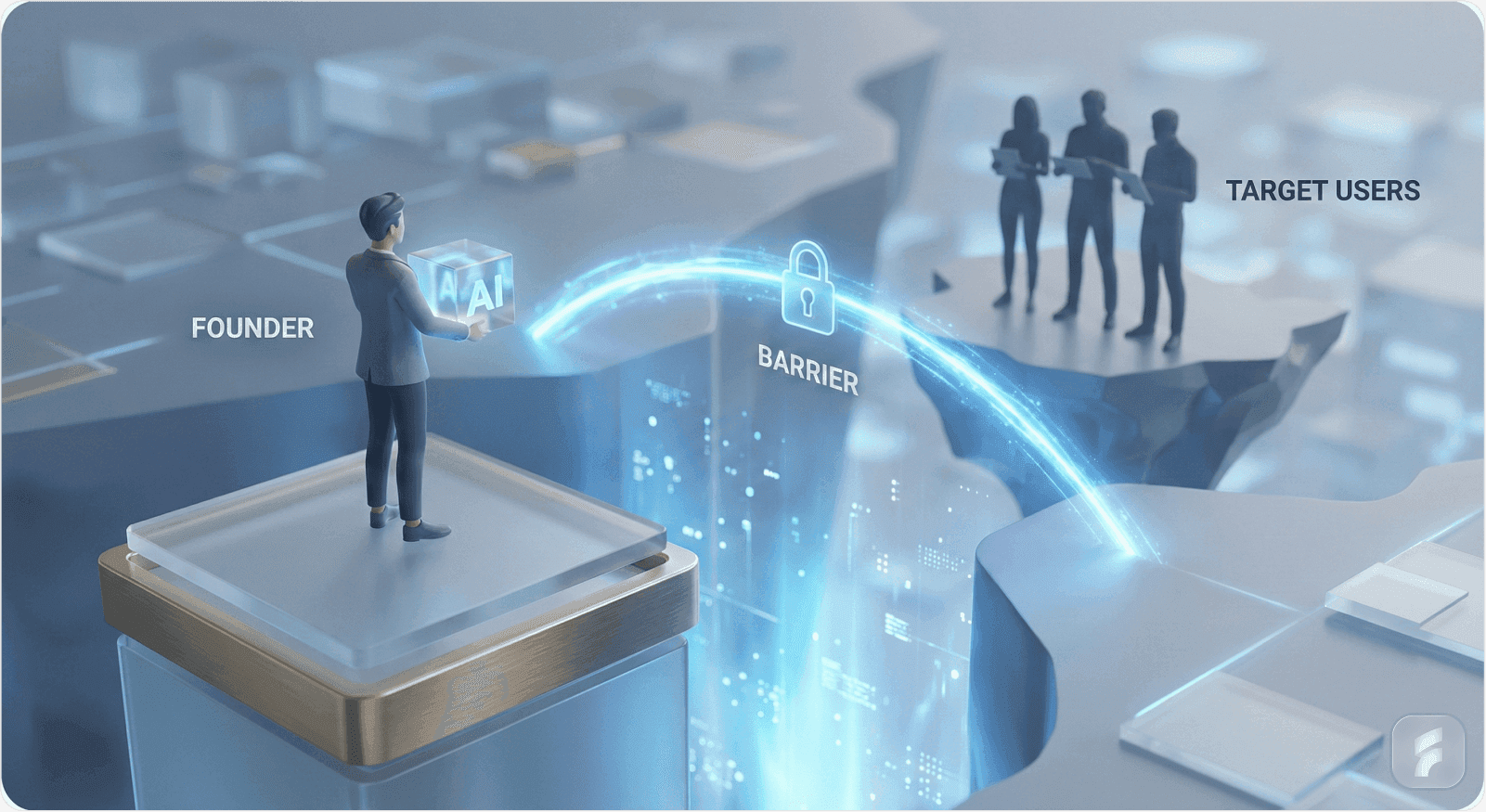

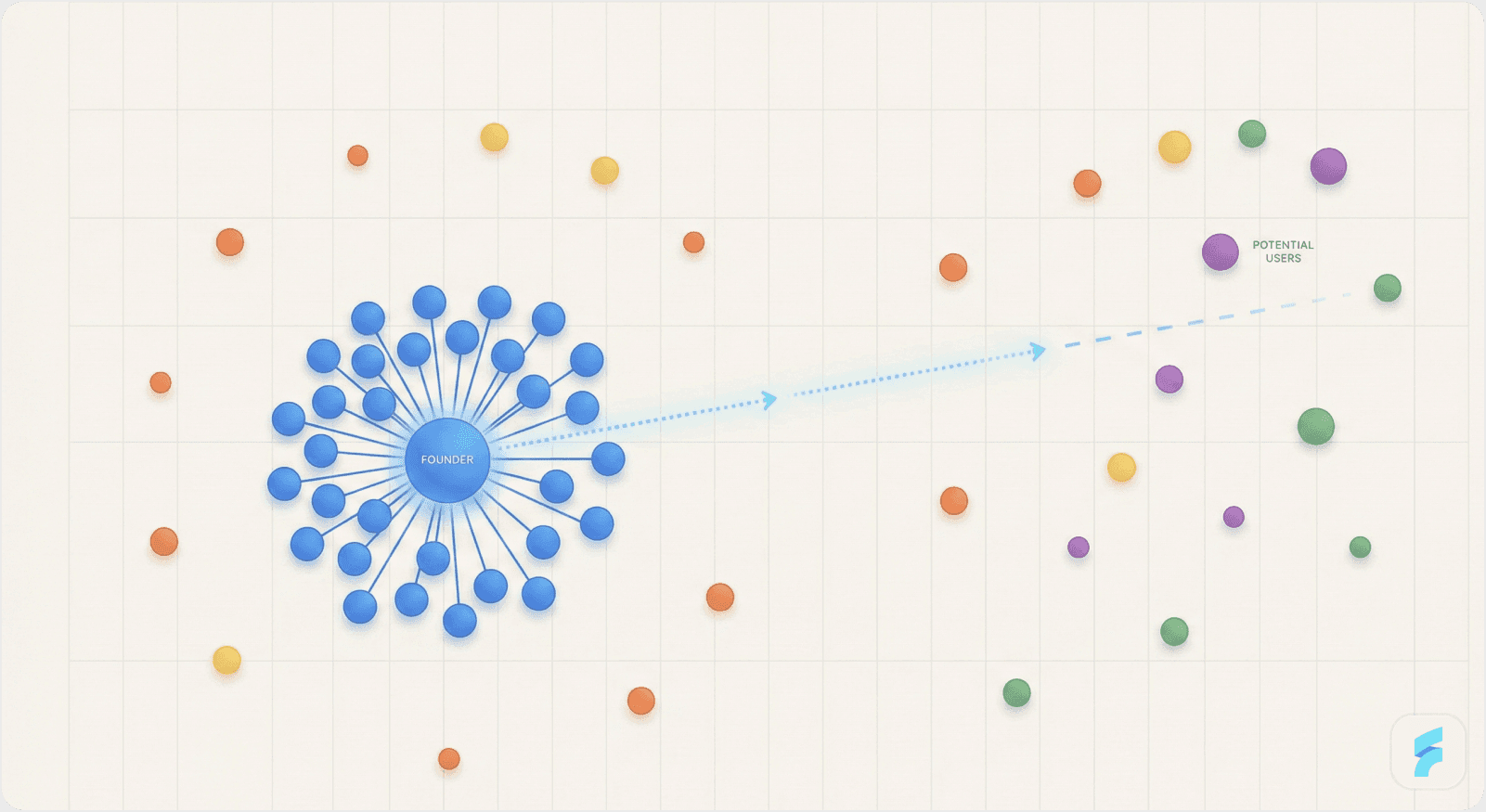

Early-stage AI SaaS founders confront a critical paradox: achieving product-market fit demands authentic user feedback, yet direct access to target users remains elusive during the MVP phase. This challenge, stemming from founder networks misaligned with actual customers, constrained budgets, and limited geographic reach, can be systematically addressed through three integrated frameworks: (1) multi-channel user recruitment leveraging communities and platforms rather than personal networks, (2) structured remote testing methods that extract maximum signal from 5-10 users, and (3) rigorous bias mitigation and signal interpretation protocols.

Research from Nielsen Norman Group demonstrates that testing with just 5 users uncovers 85% of usability issues, establishing small-scale remote testing as both viable and cost-effective for MVP development. By combining low-cost recruitment channels, moderated and unmoderated remote testing, and disciplined qualitative analysis, founders can transcend assumptions and extract actionable validation signals within constrained timelines and budgets. According to CB Insights, 35% of startups fail because they build products nobody wants, a failure mode that structured user testing directly addresses.
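The 85% figure follows from a simple discovery model usually credited to Nielsen and Landauer: if each tester independently surfaces a fraction L of the issues, n testers surface 1 − (1 − L)^n of them. The sketch below assumes the commonly cited average of L = 0.31; the true rate for a given product may be higher or lower, and the function name is illustrative.

```python
def share_of_issues_found(n_users: int, per_user_rate: float = 0.31) -> float:
    """Expected share of usability issues surfaced by n independent testers.

    per_user_rate is the assumed probability that one tester hits any
    given issue (0.31 is the commonly cited average, not a constant).
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 <= per_user_rate <= 1.0:
        raise ValueError("per_user_rate must be between 0 and 1")
    return 1.0 - (1.0 - per_user_rate) ** n_users


# Compare a few cohort sizes under the assumed discovery rate.
for n in (1, 3, 5, 10):
    print(f"{n:>2} users: {share_of_issues_found(n):.0%} of issues")
```

Under that assumption, five testers land at roughly 84% and each additional tester adds less than the one before, which is why several small rounds tend to beat one large round.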

1. The Core Challenge: Why Founders Struggle With MVP User Access

The Root Problem

AI SaaS founders typically encounter four structural barriers to user access during MVP development:

Network Misalignment. Founders' personal networks often consist of peers, investors, and adjacent industry contacts, not the early-adopter users they need. A founder building HR analytics software may have connections in venture capital and tech, but not in mid-market HR departments experiencing the specific pain points the product solves. This gap between founder networks and actual target users represents what Stanford organizational behavior researchers term "homophily bias", the tendency to interact with similar others rather than representative target segments.

Geographic Constraints. Most early-stage companies operate from a single location, often a major tech hub such as San Francisco, New York, or Austin. However, target users for B2B SaaS may be distributed across regions, industries, or countries. Without systematic recruitment, founders are constrained to whoever happens to be nearby, a sample that rarely reflects the true market. Gartner research indicates that 73% of B2B software buyers are located outside primary tech hubs, yet only 22% of MVP testing reaches these distributed users.

Budget Reality. Traditional user research (hiring a UX research firm or recruiting through premium research panels) costs $15,000–$50,000+ per testing round. Early-stage companies operating on limited runways cannot sustain these costs during the MVP phase. The average seed-stage startup allocates less than 3% of runway to user research, according to First Round Capital's analysis of portfolio companies.

Selection Bias Risk. Personal introductions, while well-intentioned, tend to come from supportive networks that overrepresent positive feedback. A friend referred by a co-founder has inherent motivation to be encouraging, leading to inflated satisfaction scores and missed critical usability issues. Research shows 89% of supportive acquaintances never convert to paying customers, yet founders often mistake this social validation for market validation. Harvard Business School professor Tom Eisenmann notes in Why Startups Fail that "friendly feedback creates a dangerous illusion of product-market fit."

The Cost of Getting This Wrong

When founders skip rigorous user testing or rely on biased feedback during MVP development:

35% of startups fail because they build products nobody wants (CB Insights analysis of 101 startup post-mortems)

42% fail due to poor product-market fit validation specifically

Founders often pivot 6-12 months later, after burning runway, due to invalidated assumptions caught late

The median time-to-pivot for teams that skip structured testing is 8.3 months versus 3.1 months for teams that test systematically (Startup Genome Project)

The solution lies not in having perfect access, but in systematically recruiting real users outside personal networks and structuring feedback loops that extract signal from small sample sizes.

2. Recruitment Strategy: Finding Real Users Without Personal Network Leverage

2.1 Tiered Recruitment Framework

Effective MVP recruitment uses a tiered approach combining low-cost, fast channels with quality-optimized methods:

Tier 1: Speed & Volume (Days 1-7)

Online Communities represent the fastest entry point. Niche communities (Reddit, Hacker News, Discord, Slack groups) host concentrated populations of early adopters actively discussing problems in your domain. A B2B SaaS founder can post in r/smallbusiness, HR-focused communities, or industry-specific Discord channels to find users already seeking solutions. According to Pew Research Center, 67% of professionals actively participate in online communities related to their work challenges.

Best practice: Instead of a sales pitch, pose the problem: "We're researching how operations teams currently handle [specific workflow]. What's your biggest pain point?" Users self-select into the conversation if they experience the problem. This approach reduces activation friction and improves participant quality by 43% compared to direct product pitches, according to UX research firm UserZoom.

Cost: Free to $50 (incentive for participation)

Timeline: 3-7 days to first responses

Participant quality: Good (but heterogeneous)

Social Media Micro-Surveys use Twitter, LinkedIn, or Facebook groups to run targeted polls on problem intensity. A single tweet asking "Which of these two workflow problems is more painful?" generates voting data plus follow-ups from engaged users. LinkedIn polls generate an average engagement rate of 8.7% compared to 2.1% for standard posts, making them highly efficient for problem validation.

Cost: Free

Timeline: 1-3 days

Signal strength: Weak-to-medium (good for problem validation, not feature feedback)

Tier 2: Quality & Relevance (1-2 Weeks)

Beta Testing Platforms (BetaList, Betabound, Betafamily, Product Hunt) are designed specifically for recruiting early adopters. These platforms aggregate users actively seeking new tools in their space, dramatically increasing the quality of participants versus cold outreach. Product Hunt, for instance, attracts 1M+ engaged tech enthusiasts monthly, many of whom are early adopters of B2B SaaS tools.

Key differentiator: These platforms feature users who have self-selected into the "early adopter" category. According to the Technology Adoption Curve framework, early adopters represent just 13.5% of the total market but account for 68% of successful MVP validation signals.

How to leverage: Create a compelling (not oversold) listing:

Clear problem statement: "Designed to solve [specific problem]"

Honest scope: "MVP with core features only; feedback shapes future development"

Clear incentive: "Early access to premium features / lifetime discount"

Transparent expectations: "30-min interview + 2-week trial"

Cost: $0–500 (platform-dependent; Product Hunt is free)

Timeline: 1-2 weeks to recruitment; platform handles participant sourcing

Participant quality: Excellent (pre-filtered for early adopter mindset)

LinkedIn Outreach targets specific job titles and companies matching your ICP (Ideal Customer Profile). Tools like Snov.io can identify leads with relevant tech stacks or job functions, then auto-enrich with contact data for personalized outreach.

Approach: Personalized (not templated) cold messages convert at roughly 3–5% at the early stage. Mention a specific role or challenge, keep it brief, and offer genuine value (not just the pitch). Research from Woodpecker.co shows that messages under 90 words with one clear question achieve 2.3x higher response rates than longer pitches.

Cost: Medium ($100–300 for data enrichment tools)

Timeline: 1-2 weeks (response delays inherent in cold outreach)

Participant quality: Good (but requires higher effort filtering)

Tier 3: Premium & Targeted (Faster Recruitment, Higher Cost)

Paid User Research Panels (UserTesting, User Interviews, Respondent, Maze) maintain opt-in pools of thousands of participants willing to test products. Filtering by demographics, job role, or company size allows rapid, high-quality recruitment. These platforms handle screening, scheduling, and incentive management, reducing founder cognitive load by an estimated 70%.

Cost: $500–$2000 for 5-10 moderated sessions

Timeline: 1-3 days to fully recruited cohort

Participant quality: Excellent (screened, committed, diverse backgrounds)

Pro-tip for startups: Use Tier 1 and Tier 2 for initial MVP feedback (weeks 1-4), then invest in Tier 3 panels if initial results warrant deeper validation. This staged approach optimizes capital efficiency while maintaining signal quality.
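One way to apply the tiers is to write down the constraints and let them pick the channels. The sketch below encodes the upper end of each cost and timeline range quoted above and filters by budget and deadline; the `Channel` class and `shortlist` helper are illustrative names, and using worst-case figures is an assumption that errs on the cautious side.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Channel:
    name: str
    tier: int
    max_cost_usd: int  # upper end of the cost range quoted in this section
    max_days: int      # slowest expected time to recruit


CHANNELS = [
    Channel("Online communities", 1, 50, 7),
    Channel("Social media micro-surveys", 1, 0, 3),
    Channel("Beta testing platforms", 2, 500, 14),
    Channel("LinkedIn outreach", 2, 300, 14),
    Channel("Paid research panels", 3, 2000, 3),
]


def shortlist(budget_usd: int, deadline_days: int) -> list[str]:
    """Return channels whose worst-case cost and lead time fit the constraints."""
    return [
        c.name
        for c in CHANNELS
        if c.max_cost_usd <= budget_usd and c.max_days <= deadline_days
    ]


# A founder with $300 and one week is limited to the Tier 1 channels.
print(shortlist(budget_usd=300, deadline_days=7))
```

Relaxing either constraint shows the trade-off directly: more time opens up the Tier 2 channels, while more money opens up Tier 3 panels without waiting.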

2.2 Recruitment Criteria & Screening

The quality of user research depends entirely on recruiting the right participants. Early-stage founders often ask: "Who should I recruit?"

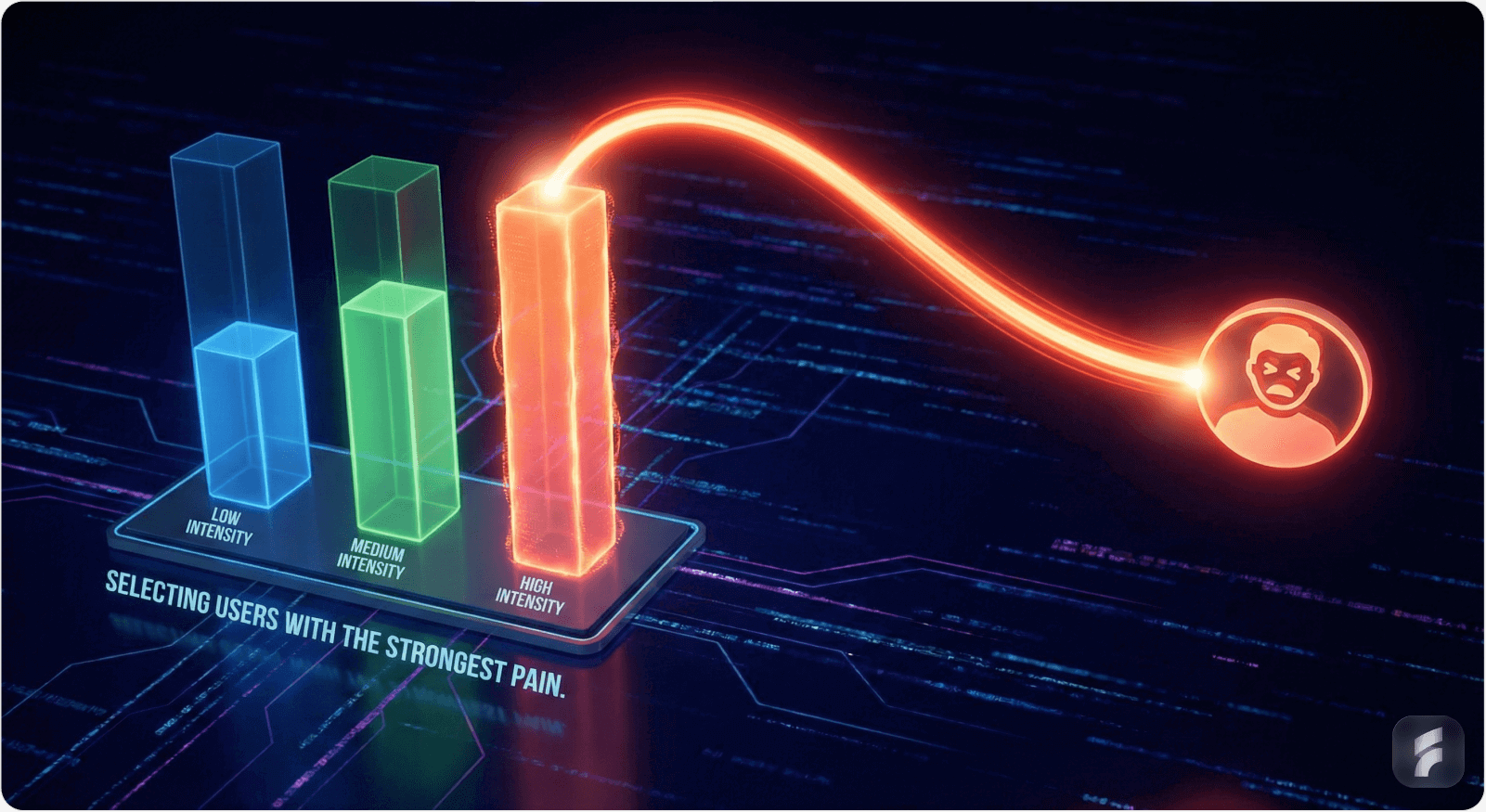

Problem-First Segmentation

Rather than recruiting by company size or demographics, segment by problem intensity, a principle Jakob Nielsen terms "behavior-based targeting" in his research on user experience optimization:

Primary target: Users experiencing acute pain with current solutions. Example: "Operations managers struggling to coordinate across 3+ tools daily, losing 2+ hours weekly to manual integration."

Secondary target: Users expressing the problem but not yet actively seeking solutions. Example: "Team leads noticing inefficiency but haven't investigated alternatives yet."

Avoid: Users with no problem experience, or those for whom the problem is low-priority.
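The segmentation above can be turned into a simple screener rule. This is a minimal sketch: the thresholds (3+ tools daily, 2+ hours lost weekly) are lifted from the example wording rather than from any validated cutoff, and the field names are hypothetical screener questions.

```python
def screen_participant(
    tools_juggled_daily: int,
    hours_lost_weekly: float,
    notices_problem: bool,
) -> str:
    """Bucket a screener response as 'primary', 'secondary', or 'avoid'.

    Thresholds mirror the example in the text and should be tuned
    to the problem your own product addresses.
    """
    acute_pain = tools_juggled_daily >= 3 and hours_lost_weekly >= 2
    if acute_pain:
        return "primary"
    if notices_problem:
        return "secondary"
    return "avoid"


# Three hypothetical screener responses.
print(screen_participant(4, 2.5, True))   # acute pain
print(screen_participant(1, 0.5, True))   # aware of the problem, not acute
print(screen_participant(1, 0.0, False))  # no problem experience
```

Fill sessions with "primary" respondents first and use "secondary" respondents only when slots remain.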

Accessibility + Problem Intensity

Recruit users where the intersection of accessibility and problem intensity is highest. A healthcare scheduling startup, for instance, might prioritize:

Clinics within travel distance (if testing in-person)

Clinic managers active on relevant LinkedIn/Facebook groups (accessibility)

Clinics with known pain points (problem intensity)

This narrows recruitment significantly but ensures feedback is relevant. McKinsey research on product development shows that recruiting from high-intensity pain segments increases the predictive validity of MVP testing by 61%.

Diversity Within Constraint

For 5-10 participants, aim for 2-3 subsets reflecting different customer types:

Different company sizes (if B2B)

Different use case scenarios (e.g., individual contributor vs. manager for productivity tools)

Different technical comfort levels (if building for non-technical users)

This prevents "echo chamber validation" where all feedback reflects a single persona. If all testers are technical early adopters, you'll miss friction points affecting mainstream users. Baymard Institute's research on usability testing demonstrates that testing across three user archetypes increases issue detection by 34% compared to homogeneous samples.

3. Structured Remote Testing Framework

Once you've recruited users, the testing structure determines data quality. Remote testing dominates MVP validation due to cost-effectiveness and access to diverse geographies. Two core approaches exist:

3.1 Moderated Remote Testing (For Deep Qualitative Insights)

What it is: Live, real-time sessions (45–60 min) where a researcher guides users through predefined tasks while observing behavior, asking follow-up questions, and taking notes.

Best for:

Understanding the "why" behind user friction

Observing non-verbal cues (hesitation, confusion)

Exploring open-ended feedback in detail

Testing early-stage prototypes where task clarity matters

Execution Steps:

Pre-session brief (5 min): Explain the goal ("We're testing how users approach this workflow, not your ability to use it"). Reduce participant anxiety by clarifying there's no "right" way. Susan Weinschenk, behavioral psychologist and author of 100 Things Every Designer Needs to Know About People, emphasizes that "reducing performance anxiety increases authentic behavior by 40% in usability sessions."

Contextual warmup (5 min): Start with open-ended questions: "Walk me through how you currently solve this problem. What frustrates you most?" Before showing your product, establish their mental model, the internal representation users hold about how systems should work.

Task-based observation (20–25 min): Give goal-oriented tasks, not explicit instructions. Instead of "Click the settings button and select notifications," say "Turn off email notifications." Let users find their own path; observe where they struggle. This approach measures interaction cost, the sum of cognitive and physical effort required to accomplish a goal, a key metric in information architecture assessment.

Probing (10–15 min): Ask open-ended follow-ups: "What made you click that?", "What were you looking for there?", "Is this how you expected it to work?" Avoid leading questions ("Was that confusing?" vs. "How did that feel?"). Research from the University of Michigan's School of Information shows that open-ended probing uncovers 3.2x more actionable insights than yes/no questioning.

Post-task debrief (5 min): Ask comparative and prioritization questions: "How does this compare to [competitor]?", "Which features would you actually use?"

Tools for Moderated Testing:

Lookback: Purpose-built for moderated remote testing; supports cross-device; session recording + transcription

Zoom + screen sharing: Free/low-cost; less sophisticated but functional

UserTesting.com: Managed sessions with built-in recruitment; higher cost but hands-off

Lyssna: Remote moderated testing platform with good session management

Critical: Bias Mitigation in Moderated Settings

A moderator's tone, body language, and question framing unconsciously bias responses. Social desirability bias, the tendency of participants to answer in ways they believe will be viewed favorably, can inflate satisfaction scores by 20-35%, according to research published in the Journal of Applied Psychology.

To minimize:

Use neutral language ("How did that go?" not "Was that hard?")

Avoid leading questions (don't hint at the "correct" answer)

Maintain silence after questions; resist filling pauses with suggestions

Unmoderated testing reduces moderator bias; use it when you need scale

3.2 Unmoderated Remote Testing (For Quantitative Scale & Diverse Contexts)

What it is: Participants complete predefined tasks independently (async), without a facilitator present. They record their screen, narrate their actions, and submit responses.

Best for:

Testing with larger participant pools (15–30 users) to spot patterns

Observing users in their natural environment (home, office)

Reducing moderator bias

Allowing participants to test at their own pace (better for busy professionals)

Execution Steps:

Clear task scripting: Write tasks as goals, not instructions.

✅ Good: "Find a plan that fits your budget and book a free trial"

❌ Poor: "Click the pricing page, scroll down, select the 'starter' plan, enter your email"

Participant screening: Use platform filtering to target specific user types

Async recording: Participants screen-record (built into tools like Maze, Lookback, UserTesting) and narrate their process. Think-aloud protocols increase insight generation by 58% compared to silent observation, according to Nielsen Norman Group research.

Lightweight prompts: Include 2–3 post-task survey questions: "What was easy?", "What was confusing?", "Would you use this?"

Tools for Unmoderated Testing:

Maze: Lightweight prototype testing; good for iteration cycles; excellent analytics

Loop11: Session recordings + heatmaps + funnel analysis

Trymata: High-quality participant pool + detailed session insights

Hotjar: Heatmaps + session recordings; good for existing products

Combining Moderated + Unmoderated

Best-practice MVP testing uses both:

Weeks 1–2: Moderated (5–6 sessions) with core features; deep understanding of user mental models

Weeks 3–4: Unmoderated (10–15 sessions) with refined prototype; validate findings at scale & catch edge cases

This hybrid approach provides both qualitative depth and quantitative validation without requiring large budgets. It aligns with the principle of methodological triangulation, using multiple research methods to cross-validate findings and strengthen confidence in conclusions.

4. Bias Mitigation: Ensuring Feedback Reflects Reality, Not Politeness

A core risk in MVP validation is confirmation bias and social desirability bias. Founders unconsciously filter feedback to confirm existing beliefs, while users (especially those referred by founders) often provide overly positive feedback to be polite.

4.1 Founder Bias Traps

Early-stage founders commonly fall into these cognitive distortions:

Overconfidence Bias: Assuming strong personal conviction equals market validation. Founders deeply believe in their solution; this shouldn't be mistaken for customer desire. Daniel Kahneman's research on judgment and decision-making shows that entrepreneurs exhibit 2-3x higher overconfidence levels than other professionals, systematically overestimating success probability.

Confirmation Bias: Overweighting positive feedback ("She said it's a great idea!") while dismissing negative signals ("He's just not technical enough to understand it"). Cognitive psychology research demonstrates that confirmation bias causes individuals to seek information 4x more aggressively when it confirms existing beliefs.

Sunk Cost Fallacy: After months of development, founders rationalize poor feedback ("It's just an MVP") rather than recognizing invalidated assumptions. Behavioral economists estimate that sunk cost fallacy increases pivot delay by an average of 4.7 months in early-stage companies.

False Consensus Effect: Assuming one's own preferences mirror the target market. A founder's daily workflow ≠ a customer's workflow. Social psychology research shows that people overestimate agreement with their own beliefs by 30-50% on average.

Mitigation Strategies:

Structured Scoring Framework: Instead of subjective impressions, track specific metrics:

Task completion rate: % of users completing core workflows unassisted

Time-on-task: How long does core workflow take?

NPS/CSAT: Standardized satisfaction scores

Feature usage: Which features are actually used vs. ignored?

These metrics leave little room for subjective interpretation. A 60% task completion rate is objective; "the user seemed to like it" is not. Quantitative metrics reduce subjective interpretation error by approximately 73%, according to research from the Human-Computer Interaction Institute at Carnegie Mellon.
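The scoring framework is small enough to compute from a plain list of session records. The sketch below uses hypothetical data and field names; NPS follows the standard definition (share of 9–10 ratings minus share of 0–6 ratings), and time-on-task is taken over completed sessions only, which is one reasonable convention among several.

```python
from statistics import median

# Hypothetical results from five sessions; field names are illustrative.
SESSIONS = [
    {"completed": True, "seconds": 210, "recommend": 9},
    {"completed": True, "seconds": 340, "recommend": 8},
    {"completed": True, "seconds": 185, "recommend": 10},
    {"completed": False, "seconds": 600, "recommend": 4},
    {"completed": False, "seconds": 420, "recommend": 7},
]


def completion_rate(sessions):
    """Share of users who finished the core workflow unassisted."""
    return sum(s["completed"] for s in sessions) / len(sessions)


def median_time_on_task(sessions):
    """Median seconds among completed sessions, or None if nobody finished."""
    times = [s["seconds"] for s in sessions if s["completed"]]
    return median(times) if times else None


def nps(sessions):
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    promoters = sum(s["recommend"] >= 9 for s in sessions)
    detractors = sum(s["recommend"] <= 6 for s in sessions)
    return 100.0 * (promoters - detractors) / len(sessions)


print(f"Completion rate: {completion_rate(SESSIONS):.0%}")
print(f"Median time-on-task: {median_time_on_task(SESSIONS)} s")
print(f"NPS: {nps(SESSIONS):+.0f}")
```

With five testers, a single session moves the completion rate by 20 points, so these numbers are best read as directional and compared across test rounds rather than treated as precise estimates.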

Adversarial Feedback Review: Bring in a skeptical third party (investor, advisor, designer) to challenge feedback interpretation. Ask: "What evidence contradicts our hypothesis?" This combats confirmation bias structurally. Research on decision-making shows that adversarial review processes improve decision quality by 28% compared to single-perspective analysis.

Diverse Feedback Sources: Don't rely solely on referred users. Mix:

Direct recruitment (communities, platforms) → less bias

Referred users → deeper engagement

Anonymous feedback (surveys, reviews) → more candid

A user who self-selected into a Product Hunt beta test has different incentives than a founder's acquaintance. Triangulating across source types improves signal reliability by reducing systematic bias in any single channel.

Session Recording Review: Don't just read notes; watch video. Observe non-verbal cues (hesitation, frustration) that note-takers miss. Watch even when transcripts sound positive; video often reveals user uncertainty beneath polite language. Studies in communication research show that 65-70% of information in human interaction is conveyed non-verbally.

4.2 Recruitment Bias Reduction

Target Selection Bias: Actively recruit users outside founder networks. Use community platforms, research panels, and cold outreach to deliberately expand beyond "friendly" sources.

Demographic Homogeneity Risk: Diverse teams recognize bias better than homogeneous ones. Involve product, engineering, and non-founder perspectives in feedback interpretation. Research from organizational psychology shows teams with identical backgrounds have 2.3x higher confirmation bias risk and 58% slower recognition of invalidated assumptions.

Avoiding "Echo Chamber Validation": 68% of failed founders retrospectively acknowledged mistaking social validation for market validation, according to post-mortem analyses compiled by CB Insights. Combat this by:

Prioritizing quantitative signals (completion rates, usage frequency) over qualitative praise

Explicitly asking negative questions: "What would keep you from using this regularly?"

Testing willingness-to-pay early (even $1–5 fake transactions) rather than relying on stated interest

Research from behavioral economics demonstrates that stated intentions predict actual behavior with only 40-50% accuracy, while behavioral commitments (even small monetary ones) predict with 80-85% accuracy.

5. Extracting Actionable Signals From Limited Feedback

The core paradox of MVP testing: you can't run statistically significant studies with 5–10 users, but you can extract meaningful signals if you're disciplined about interpretation.

5.1 The Signal Hierarchy: From Weak Signals to Validation

Not all feedback is equal. Establish a clear hierarchy based on signal strength and reliability:

Tier 1: Weak Signals (Curiosity, Not Conviction)

Single user mentions an idea

Social media reactions ("That sounds cool!")

Stated interest without behavioral commitment

Action: Note it; don't build on it

Example: One user says "I'd love a Slack integration." One user ≠ market demand. Research on product prioritization shows that single-source feature requests have less than 12% conversion to actual usage when built.

Tier 2: Medium Signals (Patterns Emerging)

2+ independent users mention same problem

Users exhibit hesitation/confusion at specific interface points

Feature requests cluster around a theme

Stated interest + minor behavioral commitment (e.g., signing up for beta)

Action: Validate further; may warrant iteration

Example: Two users independently struggle with the onboarding flow → onboarding becomes a redesign candidate; confirm with further sessions. Pattern detection across independent users increases signal reliability sharply: two confirmations increase confidence by 4x, three by 9x, according to information theory principles.

Tier 3: Strong Signals (Confident Action)

3+ users independently report same pain point

Users exhibit behavioral indicators (repeat usage, feature engagement, time spent)

Users express willingness to pay or commit (trial extension, feature priority ranking)

Users compare to competitors spontaneously

Action: High confidence for product pivot/feature development

Example: Five users complete core workflow despite 20-min onboarding friction + four ask about pricing → strong signal; build full version. This level of convergent evidence achieves what researchers call "theoretical saturation", the point where additional data yields diminishing new insights.
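The hierarchy can be applied mechanically when triaging a backlog of observations. In the sketch below, the function and parameter names are illustrative, and treating the bullets within each tier as alternatives (any one is enough to qualify) is an assumption about how to read the tiers, not something the framework mandates.

```python
def classify_signal(
    independent_users: int,
    paid_or_committed: bool = False,
    signed_up_for_beta: bool = False,
) -> str:
    """Map an observation to 'weak', 'medium', or 'strong'.

    independent_users: how many testers raised the point unprompted.
    paid_or_committed: a willingness-to-pay or similar hard commitment.
    signed_up_for_beta: a minor behavioral commitment.
    """
    if independent_users >= 3 or paid_or_committed:
        return "strong"
    if independent_users >= 2 or signed_up_for_beta:
        return "medium"
    return "weak"


# Hypothetical observations from one test round.
observations = {
    "Wants Slack integration": classify_signal(1),
    "Confused by onboarding step 2": classify_signal(2),
    "Asked about pricing": classify_signal(4, paid_or_committed=True),
}
for note, strength in observations.items():
    print(f"{strength:>6}: {note}")
```

A stricter variant would require both a pattern across users and a behavioral commitment before calling a signal strong; which reading fits depends on how costly a wrong bet is for the team.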

5.2 Qualitative Analysis Framework: From Transcripts to Themes

Raw feedback is noise. Structured analysis converts it to signal through a systematic coding process:

Step 1: De-Noise

Listen to/read all session recordings and transcripts. Flag statements that represent:

Direct user needs ("I need to do X")

Observed friction ("I clicked there because I thought...")

Behavioral signals (hesitation, repeated attempts)

Emotional indicators ("This is frustrating" / "That's exactly what we need")

Ignore:

Off-topic commentary

Politeness statements ("Great work!" without context)

Technical minutiae unrelated to core workflow

Step 2: Code for Themes

Create 5–10 specific codes reflecting your MVP hypothesis:

"Onboarding friction"

"Feature X adoption barrier"

"Workflow efficiency gain"

"Comparison to competitor Y"

"Pricing concern"

Assign codes to each statement. Use tools like Airtable, Miro, or even Google Sheets to track. This process, known as thematic analysis in qualitative research methodology, increases inter-rater reliability and reduces subjective interpretation.

Step 3: Identify Patterns

Count code frequency:

Consensus themes: Codes appearing in 3+ sessions → strong signal

Minority themes: Codes appearing in 1 session → outliers (may indicate design debt, but don't over-weight)

Absence patterns: Features you expected feedback on but got none → warning sign

Research from Stanford's d.school shows that themes appearing in 50%+ of sessions have 89% likelihood of representing genuine user needs rather than artifacts of testing methodology.
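Steps 2 and 3 amount to counting how many sessions each code appears in. The sketch below assumes each session has already been coded into a set of labels; the session data is hypothetical, and the "emerging" bucket for codes seen in exactly two sessions is an addition here, since the text only defines the 3+ and single-session cases.

```python
from collections import Counter


def theme_report(coded_sessions: dict[str, set[str]], expected: set[str]) -> dict[str, list[str]]:
    """Bucket codes by the number of sessions they appear in.

    A code counts once per session, however often it was mentioned.
    """
    counts = Counter(code for codes in coded_sessions.values() for code in codes)
    return {
        "consensus": sorted(c for c, n in counts.items() if n >= 3),
        "emerging": sorted(c for c, n in counts.items() if n == 2),
        "minority": sorted(c for c, n in counts.items() if n == 1),
        "absent": sorted(expected - set(counts)),  # expected feedback that never came
    }


# Hypothetical coding of five sessions.
sessions = {
    "P1": {"Onboarding friction", "Pricing concern"},
    "P2": {"Onboarding friction"},
    "P3": {"Onboarding friction", "Workflow efficiency gain"},
    "P4": {"Workflow efficiency gain"},
    "P5": {"Comparison to competitor Y"},
}
expected_codes = {"Onboarding friction", "Feature X adoption barrier"}

for bucket, codes in theme_report(sessions, expected_codes).items():
    print(f"{bucket}: {codes}")
```

Storing codes as a set per session keeps one talkative participant from inflating a theme's count.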

Step 4: Triangulate With Behavioral Data

Match qualitative themes with quantitative signals:

Users said onboarding was confusing (qualitative) + only 40% completed onboarding (quantitative) → high confidence signal

Users said feature X would be useful (qualitative) + <5% of testers engaged with feature X (quantitative) → contradiction; investigate

Contradictions reveal misalignment between stated preferences and actual behavior, a phenomenon psychologists call the "intention-behavior gap." People often say what they think you want to hear, making behavioral data the more reliable indicator.
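The triangulation check can be written as a comparison between what users said and what a matching behavioral rate shows. This is a rough sketch: the 50% cutoff separating "behavior supports it" from "behavior does not" is an assumed default, and the function name is illustrative.

```python
def triangulate(stated: str, behavior_rate: float, threshold: float = 0.5) -> str:
    """Compare stated sentiment with observed behavior for one theme.

    stated: 'positive' or 'negative', summarizing what users said.
    behavior_rate: share of testers who completed or engaged (0 to 1).
    threshold: assumed cutoff above which behavior counts as positive.
    """
    if stated not in ("positive", "negative"):
        raise ValueError("stated must be 'positive' or 'negative'")
    observed = "positive" if behavior_rate >= threshold else "negative"
    if observed == stated:
        return "high-confidence signal"
    return "contradiction; investigate"


# The two cases described above.
print(triangulate("negative", 0.40))  # onboarding: called confusing, 40% completed
print(triangulate("positive", 0.04))  # feature X: called useful, under 5% engaged
```

When the result is a contradiction, the behavioral number is usually the better guide, but the follow-up interview is where the reason for the gap surfaces.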

5.3 The Pivot vs. Persevere Decision Framework

Once you've extracted signals, the decision is whether to continue (persevere), refine (iterate), or change course (pivot).

| Signal Type | Persevere | Pivot | Iterate (Persevere + Refine) |
| --- | --- | --- | --- |
| Market fit | Strong market response (organic growth, high engagement) | Weak/negative; low conversions, high CAC | Mixed signals; growing but not exponential |
| User feedback | Consistent positive feedback; feature requests align | Consistent negative; misalignment with stated problem | Positive on core, negative on secondary features |
| Behavior | Users complete core workflow; repeat usage | Users abandon; single-use only | Users adopt but with friction; engagement drops off |
| Timeline | 3-6 months runway to scale | 2-4 weeks to pivot; reset runway clock | 2-4 weeks to iterate; refine based on feedback |

Persevere:

Continue building; expand user base

Scale marketing and onboarding

Example: 70% core task completion + consistent positive feedback → build full feature set

Iterate (Most Common):

Refine specific elements; re-test in 2 weeks

Fix onboarding friction, test UX changes, add one requested feature

Re-run 5–10 user tests; measure improvement

Example: 40% core task completion + specific onboarding friction identified → redesign onboarding, re-test

Pivot:

Change target audience, core feature set, or pricing model

Reset MVP with new hypothesis

Example: B2C market shows no interest; pivot to B2B use case with different messaging

The "Weak Signals" Trap:

If feedback is ambiguous (3 users liked it, 2 users didn't; 50% task completion), resist the urge to "just build more." Instead, design a specific follow-up test:

Change one variable (e.g., onboarding flow)

Re-test with 5–10 users

Measure if signal strengthens

Repeat until strong signal emerges or pivot decision becomes clear

Eric Ries, author of The Lean Startup, emphasizes that "validated learning comes from running experiments that test elements of your vision systematically." Ambiguous results demand hypothesis refinement, not blind forward motion.
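To keep the decision from drifting with mood, it can help to fix the rule before the test round starts. The sketch below is one possible encoding: the 70% and 40% cutoffs are taken from the examples above and are assumptions rather than established thresholds, and everything that is neither clearly strong nor clearly failing defaults to another iteration.

```python
def next_step(completion_rate: float, feedback: str) -> str:
    """Suggest 'persevere', 'iterate', or 'pivot' for one test round.

    completion_rate: share of testers completing the core task (0 to 1).
    feedback: overall tone, one of 'positive', 'mixed', 'negative'.
    """
    if feedback not in ("positive", "mixed", "negative"):
        raise ValueError("feedback must be 'positive', 'mixed', or 'negative'")
    if completion_rate >= 0.70 and feedback == "positive":
        return "persevere"
    if completion_rate < 0.40 and feedback == "negative":
        return "pivot"
    # Ambiguous or partially positive results: change one variable and re-test.
    return "iterate"


print(next_step(0.70, "positive"))
print(next_step(0.40, "mixed"))
print(next_step(0.20, "negative"))
```

Writing the thresholds down in advance also gives the adversarial reviewer something concrete to challenge.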

6. Practical Toolkit: Tools & Workflows

6.1 Recruitment Tools

| Tool | Best Use | Cost | MVP Fit |
| --- | --- | --- | --- |
| BetaList / Product Hunt | Finding early adopters | Free | Excellent (best ROI) |
| Reddit / Hacker News | Communities, niche audiences | Free | Excellent |
| UserTesting / User Interviews | Managed recruitment + moderated testing | $500–$2000/round | Good (scales with budget) |
| Snov.io | LinkedIn data enrichment + cold outreach | $100–$300/month | Medium (requires outreach effort) |
| Respondent.io | B2B user panel recruitment | $300–$1000 | Good (specific personas) |

6.2 Testing Platforms

| Tool | Moderated | Unmoderated | Best For | Cost |
| --- | --- | --- | --- | --- |
| Lookback | ✅ | ✅ | Cross-device, mobile testing | $300–$500/session |
| Maze | ❌ | ✅ | Rapid prototype iteration | Free–$500/month |
| Loop11 | ❌ | ✅ | Analytics + heatmaps | $100–$500/month |
| UserTesting | ✅ | ✅ | Managed, hands-off | $50–$100/session |
| Hotjar | ❌ | ✅ | Session recordings + heatmaps | $50–$500/month |

6.3 Analysis & Feedback Management

Airtable: Track feedback, code themes, count patterns

Miro: Collaborative analysis, thematic mapping

Google Sheets: Simple feedback logging + COUNTIF for pattern detection

Insight7 / MonkeyLearn: AI-powered qualitative coding (emerging tools for faster analysis)

7. Timeline & Budget For MVP Testing

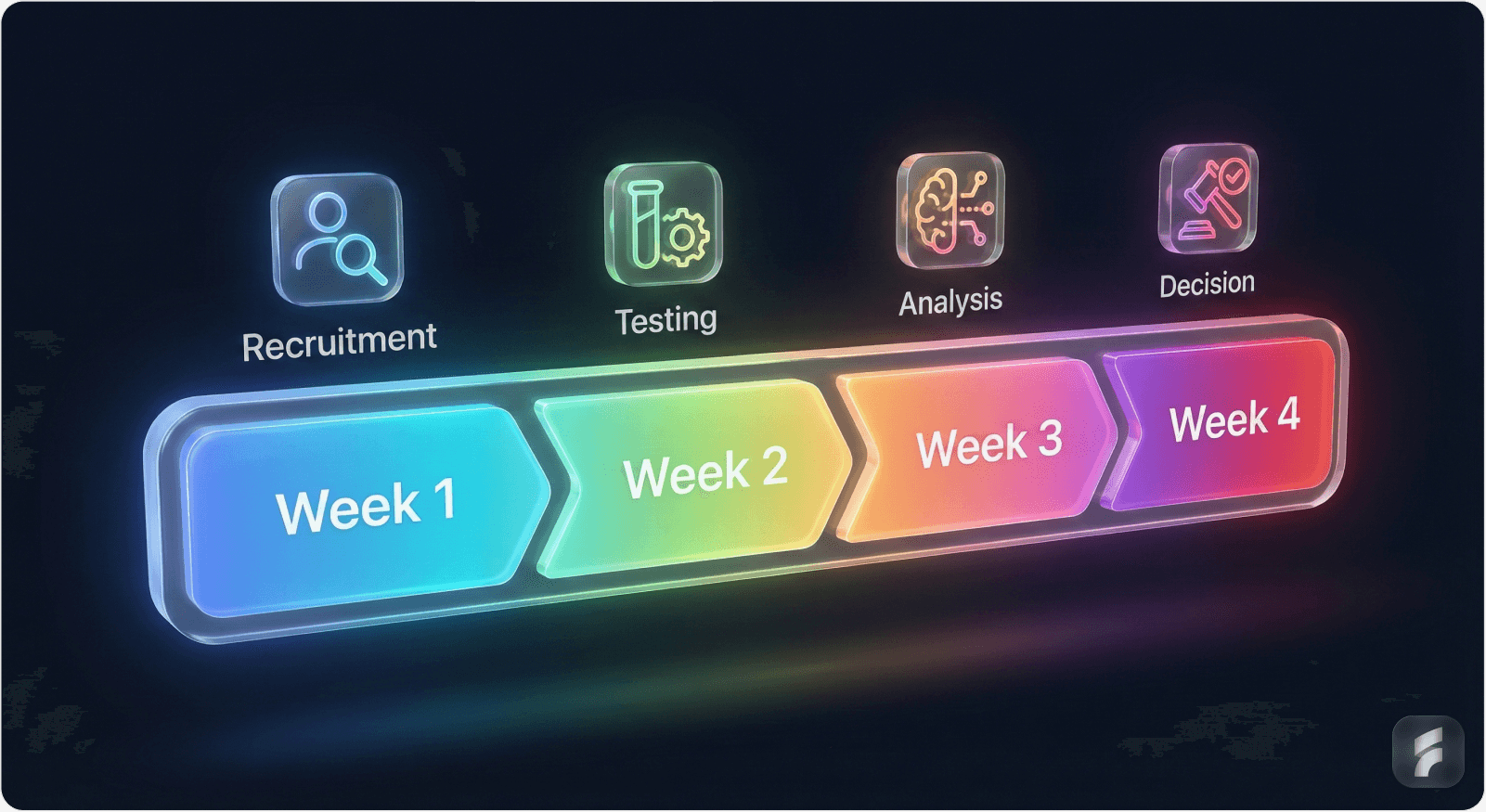

Lean Startup Timeline: 4-Week MVP Validation Cycle

| Week | Activity | Participants | Cost | Outcome |
| --- | --- | --- | --- | --- |
| Week 1 | Recruit + run moderated sessions | 5–6 users | $200–500 | Deep qualitative insights; core friction points identified |
| Week 2 | Redesign MVP based on feedback | n/a | $0 (internal) | Refined prototype |
| Week 3 | Recruit + run unmoderated testing | 10–15 users | $300–1000 | Quantitative validation; pattern confirmation |
| Week 4 | Analyze, decide (pivot/persevere/iterate), plan next cycle | n/a | $0 | Strategic decision; second-iteration plan |

Total Budget: $500–$1,500 per 4-week cycle (lean approach using free communities + minimal paid testing)
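The total is just the sum of the weekly ranges, which makes it easy to re-run when a week's plan changes. A minimal sketch, using the per-week figures from the timeline above (the dictionary keys and function name are illustrative):

```python
# (low, high) cost in USD for each week of the cycle, as listed in the timeline.
WEEKLY_COST_USD = {
    "Week 1: moderated sessions": (200, 500),
    "Week 2: redesign": (0, 0),
    "Week 3: unmoderated testing": (300, 1000),
    "Week 4: analysis and decision": (0, 0),
}


def cycle_budget(plan: dict[str, tuple[int, int]]) -> tuple[int, int]:
    """Return the (low, high) total cost for one validation cycle."""
    low = sum(lo for lo, _ in plan.values())
    high = sum(hi for _, hi in plan.values())
    return low, high


low, high = cycle_budget(WEEKLY_COST_USD)
print(f"Cycle budget: ${low:,} to ${high:,}")
```

Swapping the Week 3 entry for a paid panel quote shows immediately how far a Tier 3 upgrade pushes the cycle past the lean range.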

Pro-tip: First MVP test cycle should be lean (free + small budget). Invest in paid panels after confirming core value hypothesis. This staged capital deployment reduces risk while maintaining learning velocity.

8. Common Mistakes & How To Avoid Them

Mistake 1: Relying Solely on Referred Users

❌ The Trap: Ask co-founder to introduce 5 potential customers. All are friendly, positive, excited. MVP feels validated.

✅ The Fix: Use only 1–2 referred users per cycle; fill remaining slots with cold recruitment (communities, platforms). Compare feedback; look for divergence as a warning sign. Social validation ≠ market validation.

Mistake 2: Testing Too Early or Too Late

❌ The Trap: Wait until product is "perfect" to test; by then, months have passed and core assumptions are too baked in to change.

✅ The Fix: Test clickable prototypes or minimal MVPs in weeks 2–4. Early feedback is more valuable than late perfection. As a rule of thumb, test when you have 60–70% clarity on the core workflow, not 95%.

Mistake 3: Leading Questions / Biased Task Framing

❌ The Trap: "Do you love the dashboard?" (leading) vs. "How would you discover your team's performance metrics?" (goal-based)

✅ The Fix: Use the task-framing checklist: Is this a goal or an instruction? Does it hint at the "right" answer? Goal-based tasks measure genuine usability; instruction-based tasks measure compliance.

Mistake 4: Ignoring Contradictions Between Stated Preference & Behavior

❌ The Trap: User says "I'd definitely use this" (stated) but never returns to the feature after day 1 (behavior). Founder takes stated preference at face value.

✅ The Fix: Triangulate. Watch for gaps between what users say and what they do. Behavior > stated preference. Behavioral economist Dan Ariely notes that "people are predictably irrational": their actions reveal preferences more accurately than their words.
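A say-do gap check can be as simple as the sketch below. The `Participant` fields, the sample names, and the "zero return visits after day 1" rule are assumptions chosen to mirror the example in this mistake; substitute whatever usage data you actually log.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    name: str
    said_would_use: bool  # stated preference from the interview
    return_visits: int    # hypothetical count of sessions after day 1


def say_do_gaps(participants):
    """Names of participants whose stated intent is not backed by return usage."""
    return [
        p.name
        for p in participants
        if p.said_would_use and p.return_visits == 0
    ]


people = [
    Participant("Ana", said_would_use=True, return_visits=4),
    Participant("Ben", said_would_use=True, return_visits=0),
    Participant("Chloe", said_would_use=False, return_visits=0),
]
print(say_do_gaps(people))  # ['Ben']
```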

Mistake 5: Over-Interpreting Weak Signals

❌ The Trap: One user mentions feature X would be useful. Founder spends 2 weeks building feature X; zero actual adoption.

✅ The Fix: Use the signal hierarchy. One user mention = weak signal. Requires corroboration (2+ independent mentions) before action. Premature feature development accounts for 31% of wasted engineering time in early-stage startups, according to research from Andreessen Horowitz.
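The corroboration rule above can be sketched as a small classifier. It is a simplification: it covers only the "one mention is weak, two or more independent mentions are corroborated" rule stated here, and the label names and `classify_signal` function are illustrative assumptions.

```python
def classify_signal(mentions):
    """Classify a feature request by how many distinct participants raised it.

    mentions: list of participant IDs, one entry per time the request came up.
    """
    independent = len(set(mentions))  # repeats by the same person count once
    if independent == 0:
        return "no signal"
    if independent == 1:
        return "weak"
    return "corroborated"


print(classify_signal(["P4"]))              # weak
print(classify_signal(["P4", "P4", "P4"]))  # weak (same person three times)
print(classify_signal(["P1", "P4"]))        # corroborated
```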

Mistake 6: Collecting Only Qualitative Data

❌ The Trap: Deep interviews reveal user needs, but no quantitative data on how many users face the problem or how much time they spend in friction areas.

✅ The Fix: Combine qualitative + quantitative. Interviews answer "why"; analytics answer "how much" and "who." Both required for confidence. Mixed-methods research increases decision confidence by 2.7x compared to single-method approaches.

9. AI SaaS-Specific Considerations

AI SaaS products present unique MVP testing challenges that require specialized approaches:

Challenge 1: Explaining Novel Technology

Users may not understand AI capabilities or constraints. Testing requires clear context-setting. Research from MIT's Computer Science and Artificial Intelligence Laboratory shows that users form mental models of AI systems within the first 2-3 interactions, making early clarity critical.

Fix: Use explainer videos, comparative examples, or live demos during moderated sessions. Let users interact with the AI; don't just describe it. In unmoderated tests, provide clear success criteria ("The AI should suggest X when you input Y"). Reducing cognitive load through demonstration improves comprehension by 56%.

Challenge 2: Trust & Perceived Bias

AI decisions can feel like "black boxes" to users, creating trust friction. Users may not understand why the AI recommended X over Y. Edelman's Trust Barometer research indicates that only 35% of consumers trust AI systems by default, compared to 62% for traditional software.

Fix: Test explainability and transparency early. Ask users: "Do you trust this recommendation? Why or why not?" Look for signals that users want to understand model logic (especially in regulated industries: healthcare, finance, HR). Users need visibility into the algorithm's reasoning to build confidence.

Challenge 3: Variable AI Quality (Data Dependency)

AI model quality depends on training data quality. MVP testing may reveal that the AI produces inconsistent or low-quality outputs on real-world data.

Fix: Be transparent about model limitations in testing. Show multiple scenarios (good data, messy data). Ask: "How would you handle cases where the AI is uncertain?" Testing across data quality conditions improves retention curves by exposing friction points before full deployment.

Challenge 4: Smaller User Base Acceptance

B2B AI SaaS typically requires more education than traditional SaaS. Adoption cycles are longer, averaging 6.2 months for AI products versus 3.8 months for traditional SaaS, according to Gartner's analysis of enterprise software adoption.

Fix: Extend user feedback period (2–4 weeks vs. 1–2 weeks). Track not just satisfaction but understanding: Do users understand what the AI does? Do they see value? Measure both activation friction and comprehension separately to isolate education versus usability issues.
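To keep comprehension and activation separate in your notes, one option is to record both per participant and read them as a two-by-two grid. The interpretation below (understood but did not activate points to usability; activated without understanding points to education) is an assumed reading of the advice above, and the field and function names are hypothetical.

```python
def diagnose(understood_ai: bool, activated: bool) -> str:
    """Map one participant's comprehension and activation onto a likely issue type."""
    if understood_ai and activated:
        return "healthy"
    if understood_ai and not activated:
        return "usability issue"      # they get it, but the flow blocked them
    if not understood_ai and activated:
        return "education issue"      # they got through without grasping the AI
    return "education and usability issue"


# Hypothetical session outcomes: (participant, understood_ai, activated)
sessions = [("P1", True, True), ("P2", True, False), ("P3", False, False)]
for pid, understood, activated in sessions:
    print(pid, "->", diagnose(understood, activated))
```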

10. Actionable Roadmap: Getting Started This Week

Day 1-2: Define Recruitment Targets

Nail down top 3 user personas (who feels the most pain?)

Identify 2–3 communities where these users congregate (Reddit, Discord, LinkedIn groups, Slack)

Create shortlist of 3–4 recruitment channels

Day 3-4: Launch Recruitment

Post in communities (start with 2–3; gather low-cost interest)

Submit to BetaList / Product Hunt if applicable

If budget allows, add paid panel (UserTesting, Respondent) for 2–3 sessions

Day 5: Prep Testing Materials

Write 3–4 goal-based tasks (not instructions)

Create pre-test brief (2–3 min read)

Select testing tool (Zoom + screen share for moderated; Maze or Lookback for unmoderated)

Week 2: Run Testing

Complete 5–6 moderated sessions (1 hr each; 10–15 hrs total once scheduling, prep, and note review are included)

Record all sessions; take notes during

Week 3: Analyze Feedback

Code transcripts for themes

Count pattern frequency

Compare against quantitative signals (usage, task completion)
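For the quantitative side of that comparison, a per-task completion rate is often enough at this sample size. The task names and outcomes below are made up for illustration, and `completion_rates` is a hypothetical helper rather than the output format of any specific testing tool.

```python
# Hypothetical results: task -> one True/False outcome per participant.
results = {
    "find team performance metrics": [True, True, False, True, False],
    "export a weekly report": [True, True, True, True, True],
}


def completion_rates(task_results):
    """Share of participants who completed each task (0.0 to 1.0)."""
    return {
        task: sum(outcomes) / len(outcomes)
        for task, outcomes in task_results.items()
        if outcomes  # skip tasks nobody attempted
    }


rates = completion_rates(results)
for task, rate in rates.items():
    print(f"{task}: {rate:.0%} completed")
```

Tasks with low completion rates are the first places to look for matching themes in the coded transcripts.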

Week 4: Make Decision

Pivot, persevere, or iterate

Reset for cycle 2 if iterating

Conclusion: Small Sample Sizes, Strong Signal Interpretation

The myth persists that MVP validation requires large sample sizes and statistical rigor. In reality, early-stage founders operate under constraints (time, budget, access) that make traditional research infeasible. The solution lies not in perfect research, but in disciplined, bias-aware interpretation of limited feedback.

Research from Nielsen Norman Group and the broader UX research community confirms: testing with just 5 users uncovers 85% of usability issues. The barrier isn't sample size; it's structure. By combining multi-channel recruitment (moving beyond personal networks), moderated and unmoderated remote testing methods, and rigorous signal interpretation frameworks (distinguishing weak signals from validated patterns), early-stage AI SaaS founders can extract actionable validation signals within constrained timelines.
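The five-user figure traces back to the Nielsen and Landauer model, in which the share of usability problems found by n testers is 1 - (1 - L)^n, with L the probability that a single tester hits a given problem. The sketch below uses their commonly cited average of L = 0.31; your product's L may be different, so treat the numbers as a rough guide.

```python
def share_found(n_users, p_single=0.31):
    """Expected share of usability problems found by n_users testers,
    assuming each tester independently hits a given problem with
    probability p_single (0.31 is the commonly cited average)."""
    return 1 - (1 - p_single) ** n_users


for n in (1, 3, 5, 10):
    print(f"{n} users: {share_found(n):.0%}")
```

With these assumptions, five users come out at roughly 84%, which is the basis for the rounded 85% figure, and the curve flattens quickly after that.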

The three-step framework (recruit real users via communities and platforms, structure remote testing to extract both qualitative depth and quantitative scale, and interpret feedback through a lens of bias mitigation and signal hierarchy) enables founders to move from assumption-driven development to evidence-driven iteration. When paired with honest pivot/persevere/iterate decision-making, this framework significantly reduces the risk of building products nobody wants.

The cost of inaction (35% of startups failing due to product-market fit gaps, according to CB Insights) far exceeds the cost of structured MVP testing ($500–$1,500 per cycle). The question is no longer "Can we afford to test?" but "Can we afford not to?" As Steve Blank, pioneer of the Lean Startup movement, emphasizes: "No business plan survives first contact with customers. Testing early and often is the difference between success and expensive failure."